A Declarative Orchestration Framework: GitBridge in Orchestra

.yml based DSL ontop of everything you would have written anyway

As Data Teams shift from providing services to providing a data platform anyone can use, I want to share a common anecdote that’s heard time and time again.

“We started using Airflow for our orchestration. It worked fine, and then we realised we needed a platform engineer to maintain the infrastructure and some of the operators weren’t ideal, so we built our own. There was no alerting, so we built an alerting service too. Airflow is metadata-agnostic, so there was no centralisation of metadata, so we built pipelines to fetch it and dashboards to monitor the pipelines’ metadata. Not having a catalog was a real pain so we invested some time implementing logging and metadata collection within Airflow itself to give us observability in datadog and our data catalog. All while this was going on we had business users clamouring for access to data pipelines - but we couldn’t give them access to Airflow, that would be silly! So we built a .yml based DSL around it so anyone can build pipelines! It’s been 4 years. We have a data platform team of about 20/25, Snowflake bill is huge, about 15 Airflow instances. It ain’t much but it’s honest work”

Our vision at Orchestra has been to provide a flexible but powerful platform that effectively does everything above. A huge objection we’ve always got is “well look the GUI is nice but I want to be able to edit the code myself and git-control it”

Fair objection. After all, you can do that in all the other options.

We did always find it interesting as to why, though. After all, Orchestra forces you to do lightweight orchestration, meaning the business logic of how you ingest, transform, surface data etc. is somewhere else - somewhere that WOULD always be git-controlled. Do you really need to git-control and CI/CD check the logic A→B—>C? After all, there’s version control in the portal already.

Something we’ve learned is the answer to that question is “probably not but we want to do it anyway as we want to adhere to best practices” which is totally fair. I guess by starting with not exposing the code it forced us to really ask why people want to do it.

The second point - why do you want to edit the code directly? is an interesting one. The GUI is designed to be the fastest possible way to build pipelines of a certain ilk. However, programmatically creating pipelines, creating many many pipelines, or writing pipelines with many repeated tasks.

This raises an important question for data platform leads - which is that if your orchestrator can do everything, and you can use AI or some cheap resource to create lots of code then you basically don’t need another tool.

After all, an observability tool is basically just a UI ontop of a shed load of SQL - something you can probably write and version control in-house. After all, if all those SQL statements running every 15 minutes are truly the reason you identify all those mission-critical errors, then perhaps you should be git controlling them and having an audit trail so that if someone turns one off, you know who to shout at.

Anyway, Orchestra has git control now and I think it’s a really good illustration of the work many data teams need to go through and how Orchestra helps. Many of us data folks have a vision of what good looks like, this is a nice step towards it. PR below.

Introduction

Today we’re excited to announce one of Orchestra’s biggest ever features — GitBridge.

GitBridge is a bi-directional sync in between the Orchestra UI and your Git Provider. By providing this capability, Data Teams can get all the benefits of git while leveraging the power of the Orchestra UI to rapidly build data pipelines.

Data Teams can now

Directly edit the code generated by the Orchestra UI

Create different branches using Git that are synchronised with the Builder View in Orchestra

Collaborate together on the same pipelines

Store an audit trail of user-level commits

Review scheduling and orchestration changes in PR Review

Implement CI/CD checks as part of pipeline deployment.

This is a huge development for us as a team. We have always wanted to provide data teams with a best-in-class developer experience for building data pipelines. As such, the core pipeline has always been code-first using .yml files which were not editable or viewable to the user until now.

A common objection we received is that legacy orchestration frameworks are code first and although they require teams to write a lot of code, obviously facilitate robust developer lifecycles, git-control, and CI/CD.

With GitBridge, we’re now able to position Orchestra on par with legacy orchestration frameworks from the developer perspective.

There is still a bit to do - Orchestra currently does not support environments which is desirable for companies running true separate staging and production environments. We expect to support these by mid Q1 2025, alongside more sophisticated CI checks.

We are very excited to be releasing this new functionality and supporting data teams who are looking to combine agility and speed with software engineering best practices.

To check-out the flow, sign-up to the Free Tier. To Read more about how to get set-up, keep on reading.

How do you set-up Orchestra with your Git provider?

One of the most important parts of software engineering is ensuring there is an audit trail for change tracking. It is imperative to understand who changed what where and when.

To facilitate this, Orchestra requires two git connections; one to your Git Organisation and one per User. This means that when individual users (in Orchestra) make changes, these come from their accounts, which enables user-level commit history tracking.

Github Organisation connection in Orchestra

Head to Settings -> Account and select GitHub. You will be redirect to GitHub, where you are prompted to select an organisation. Select your organisation's account (not your personal GitHub).

Select the repositories where Orchestra pipelines will be stored and continue. You will be redirected to Orchestra:

User connection

If you are the user that configured the organisation connection, your user connection is configured automatically. Changes committed from this Orchestra account will correspond to the relevant GitHub account that was used to authenticate your GitHub Organisation.

For other users in the account who wish to edit pipelines stored in Git, you will be prompted to login to your GitHub Organisation with your GitHub user account:

How do you build Pipelines that are stored in Git using Orchestra?

Before starting, ensure you have a repository in mind where you will store the pipeline.yml file. Make a note of the location (typically your organisation name) and the name of the repo e.g. organisation/orchestra.

Option 1 — creating a pipeline in Orchestra

To create a pipeline in Orchestra, head to the home page and select new pipeline:

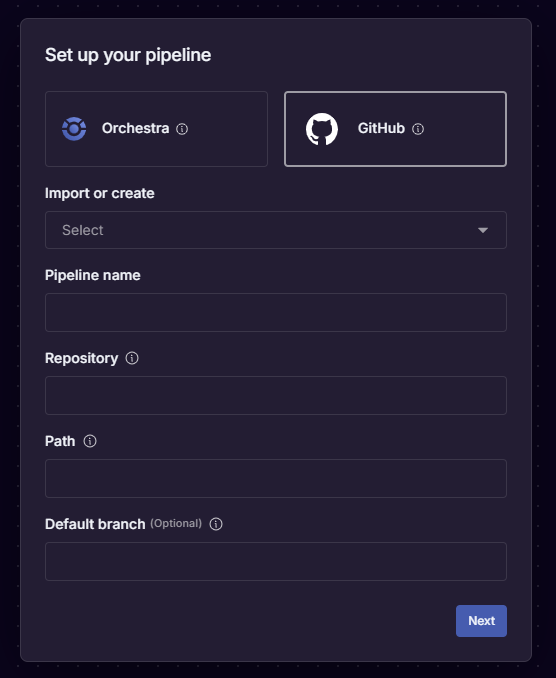

You will be asked to choose if the pipeline should be Orchestra or Git-Backed. Select ‘Git-Backed’:

Import or Create: Select Create

Pipeline name: Choose a succinct name for the pipeline

Repository: The location of your repository e.g.

organisation/orchestraPath: The complete path for the file location. For example, to store a file called

pipeline.ymlin the root directory, enterpipeline.yml. To store it in a folder calledpipelinesenterpipelines/pipeline.yml. The file must not already exist in the repositoryDefault branch: The default branch you wish to allow Orchestra to use for running pipelines in Production. Note: this does not have to match the default branch configured in your Git provider

Working branch: (optional) When developing, you should work in a separate branch to the default branch. Choose a name for this branch e.g.

orchestra_dev, or select an existing branch

After this, create a base pipeline in the UI with any of your components:

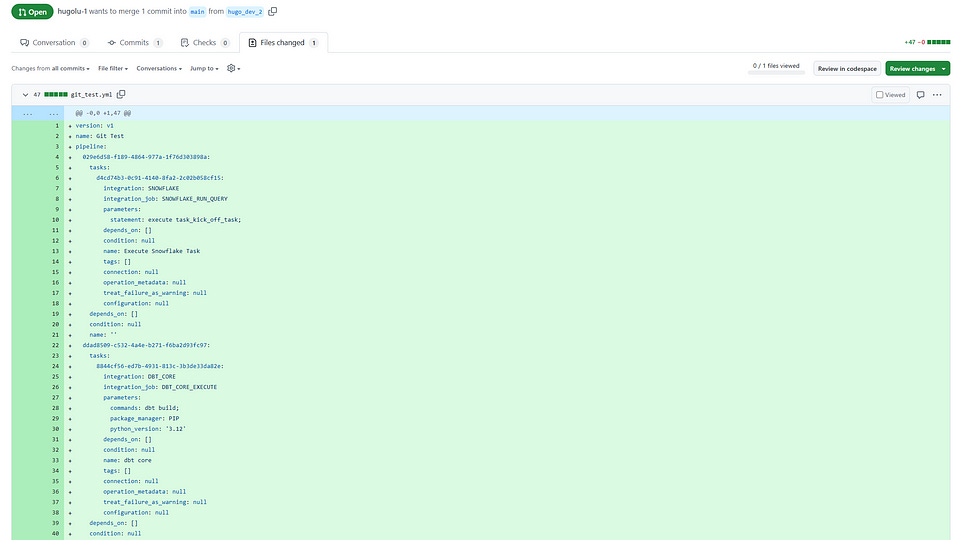

Upon clicking “Save”, Orchestra will push these changes to the working branch defined (if not provided, Orchestra will attempt to push to the default branch):

The development branch can now be used to create a pull request, as if you had written the .yml code locally! With this configuration complete, you are now ready to configure CI/CD.

Option 2 — creating a pipeline locally

We recommend you use the editor to generate the .yml in the Orchestra UI first. This will allow you to see the relevant schema for different tasks. After you’ve done this, you can select the Download YAML button in the top right hand corner.

This is by no means necessary. For example, when we release the .yml checker it will be trivial to use AI to generate the .yml directly in the code editor (from scratch), or, knowing the schema, to create pipelines programmatically.

Downloading the .yml file from Orchestra

Commit this file to your git repository and follow the process required to publish this to your preferred default branch. The pipeline.yml file may look something like this:

version: v1

name: 'Internal: end-to-end pipeline [dbt only]'

pipeline:

3576067f-bb91-4cb0-9515-cf5df23214b3:

tasks:

f192bbb7-5049-42b1-9bca-643b3aa2502e:

integration: DBT_CORE

integration_job: DBT_CORE_EXECUTE

parameters:

commands: 'dbt seed;

dbt build --select models tag:daily;'

package_manager: PIP

python_version: '3.12'

depends_on: []

condition: null

name: execute dbt

tags: []

connection: data_dbt_bigquery_48170

operation_metadata:

63c3c660-f780-494d-b460-4b1d65bf5716:

s3_bucket_name: null

s3_key_prefix: null

dbt_warehouse_identifier: null

integration: GCP_BIG_QUERY

connection: internal_dbt_metadata_52100

treat_failure_as_warning: null

configuration: null

depends_on: []

condition: null

name: ''

f45ffc46-88f8-497b-a630-d341a92ec00c:

tasks:

f93256bc-7b60-488b-9664-3c4616c8285d:

integration: LIGHTDASH

integration_job: LIGHTDASH_REFRESH_DASHBOARD

parameters:

dashboard_id: 23e12d2e-6b3c-43e7-9cf6-fab399*****

depends_on: []

condition: null

name: Refresh Lightdash

tags: []

connection: null

operation_metadata: null

treat_failure_as_warning: null

configuration: null

depends_on:

- 3576067f-bb91-4cb0-9515-cf5df23214b3

condition: null

name: ''

schedule:

- name: Daily at 8am (UTC)

cron: 0 5 ? * * *

timezone: UTC

trigger_events: []

webhook:

enabled: false

operation_metadata: null

configuration:

retries: 2

timeout: null

Once that is done, you can head to Orchestra and create a new pipeline. When you choose “Git-Backed”, make sure you select “Import” rather than “Create”:

Now your pipeline will be connected to the relevant file in git and you can continue editing in Orchestra / Git. Orchestra will ensure both are kept in sync.

Option 3 — store in Orchestra

When creating a pipeline, you can choose for a pipeline to be “Orchestra-backed”. This means that instead of storing the pipeline.yml files and changes in your git provider in different branches, Orchestra handles this itself. This feature supports versioning, rollbacks and role-based access control for review (i.e. only certain roles can publish changes). This is a great option when getting started with Orchestra, and does not preclude a move to making a pipeline Git-Backed (you can simply download the .yml file, and move it to your Git Repo later).

FAQ

Can I migrate a pipeline between Orchestra and Git?

Yes — you can either download the .yml file via the UI, and commit it to your git repository, or change the pipeline settings in Orchestra to use a different storage provider.

If I update the schedule in Git, is it updated in Orchestra automatically?

Yes — Orchestra will listen to file updates for the relevant pipeline files and update schedules and pipeline triggers accordingly.

Can I connect to multiple Git organisations in one account?

Currently, we only support a direct one to one mapping between Orchestra account and Git organisation.

I am getting errors when performing actions on pipelines that I did not get when I was storing pipelines in Orchestra — what could be the issue?

Check the organisation connection has sufficient permissions to read and write to all the repositories it needs to access.

Orchestra may be unable to write updates to protected branches — Orchestra will show the protected statuses of branches.

If a file is updated locally, and being edited in the UI, a conflict may be possible. Refresh the page and begin editing again.

Find out more about Orchestra

Orchestra is a unified control plane for Data and AI Operations.

We help Data Teams spend less time maintaining infrastructure, make them proactive instead of reactive, and ultimately win trust in data and AI from the Business.

We do this by consolidating Orchestration with monitoring, data quality testing, and data discovery. You don’t need an observability, lineage, catalog etc. with Orchestra.

Check out