Announcing Nimble: end-to-end AI-enabled web scraping with Orchestra

Web scraping has never been as easy, powerful or AI enabled

Substack note

Feel free to skip the article below but I want to take a note to describe some interesting developments in the web scraping industry.

For years, scraping data from the web has been a pretty tricky manual exercise. This is because of problems like IP Blocking, Web page structure changes, and complicated HTML parsing.

There are now vendors such as Nimble and others who are building managed solutions for webscraping. Advances in AI have allowed them to proliferate, meaning that now we no longer require large in-house webscraping teams to effectively turn the internet into a data source.

Webscraping is something everyone should do. For example, it’s a well-known fact that Apollo.io, the successful lead generation SAAS Company, effectively built it’s reputation through a combination of scraping individual persona data from the internet and using this to power SEO on individuals - they subsequently became the leader in this space.

Of course, even with advances in Open AI running webscraping pipelines still requires quite a lot of effort, and definitely a data engineer, because of the orchestration and infrastructure. You need to run your web scraping job, and then you need to trigger and monitor all your downstream stuff afterwards.

That’s why I think building an integration to a platform like Nimble really unlocks a lot of possibilities for Data Teams interested in expansive, “aggressive” / value-accretive actions.

If you’re interested in reading more about web scraping in detail, you can see a few additional articles here:

Web scraping with AI opportunity - link

Web scraping real-time pricing data in retail - link

Leveraging web scraping for intelligence gathering - link

We’re really excited about how advances in Generative AI have made the process of scraping data from the web more robust. We’re happy to democratise this to data teams of all sizes. For the full press release, read on below. If you’re ready to try it out, hit the button:

Introduction

We’re extremely excited to announce our integration and partnership with Nimble — the Artificial Intelligence (“AI”) enabled web-scraping API.

With this integration, Orchestra users can leverage the power of Nimble’s API to gather data from the internet at an unprecedented accuracy, speed and reliability.

This represents a significant innovation in the field of web-scraping, where simple changes in HTML-code structure have proven to be a constant thorn in the side of Data teams for the past 20 years.

Why you should be webscraping

There are over 50 billion pages on the internet — that’s a lot of data. While incredibly valuable, this information is hard to access for production systems. Changes in HTML-code structure, the necessity of proxy network management, and long-running infrastructure requirements mean scraping data at scale is often a difficult task and not for the faint-hearted.

To this point, many Intelligence or Research providers, Hedge Funds, and software vendors in the travel market such as Booking.com have historically had scores of developers whose role is to manage the infrastructure to scrape data at scale. This powers use-cases such as real-time price intelligence, real-time weather updates, search engine optimisation monitoring and the monitoring of reviews.

By combining Nimble with Orchestra, anybody working in Data (technical or otherwise) can leverage Nimble for these types of use-cases. This unlocks an enormous range of hitherto inaccessible opportunities for deriving value from data — for teams of any size.

How does Nimble work?

Nimble is an AI-enabled API for webscraping. Nimble manages problems such as proxy management, concurrency, and HTML-parsing. This means users need only define the data source via a URL or list of URLs, provide credentials to storage options (Amazon S3 or Google Cloud Storage (“GCS”)), and execute jobs on a schedule in order to gather data from the web at scale.

This requires users to write and execute [python] code, while asynchronously monitoring Nimble’s API to poll for job completion.

Upon job completion, additional tasks such as JSON flattening or Machine learning / Generative AI model training can be undertaken. Another popular use-case is to move this data from Object storage (S3/GCS) to a data warehouse environment following some basic transformation, for use in dashboards or other analytical use-cases.

An example of how you might design such a data pipeline using an Open-Source Workflow Orchestration tool such as Airflow is below.

from airflow import DAG

from airflow.operators.python_operator import PythonOperator

from bs4 import BeautifulSoup

import requests

import json

from datetime import datetime

default_args = {

'owner': 'airflow',

'depends_on_past': False,

'start_date': datetime(2024, 5, 8),

'email_on_failure': False,

'email_on_retry': False,

'retries': 1,

}

def scrape_website():

# Scraping the website

url = 'YOUR_WEBSITE_URL_HERE'

response = requests.get(url)

soup = BeautifulSoup(response.text, 'html.parser')

# Extracting data

data = []

for item in soup.find_all('YOUR_HTML_TAG'):

# Extract whatever data you need from each item

# Example:

title = item.find('YOUR_TITLE_TAG').text.strip()

description = item.find('YOUR_DESCRIPTION_TAG').text.strip()

link = item.find('YOUR_LINK_TAG')['href']

# Create a dictionary for each item

item_data = {

'title': title,

'description': description,

'link': link

# Add more fields as needed

}

data.append(item_data)

return data

def flatten_json():

# Getting scraped data

scraped_data = scrape_website()

# Flattening data

flattened_data = []

for item in scraped_data:

flattened_item = {

'title': item['title'],

'description': item['description'],

'link': item['link']

# Flatten more fields if needed

}

flattened_data.append(flattened_item)

# Writing flattened data to JSON file

with open('/path/to/output.json', 'w') as json_file:

json.dump(flattened_data, json_file)

with DAG('webscraper_dag',

default_args=default_args,

description='A DAG to scrape a website and flatten JSON',

schedule_interval='@daily') as dag:

scrape_and_flatten = PythonOperator(

task_id='scrape_and_flatten',

python_callable=flatten_json

)

scrape_and_flatten()Benefits of Orchestra + Nimble

Orchestra’s integration makes this process significantly easier. We’ve built a market-leading integration with Nimble that means users need only define the frequency, data type, and storage location in order to get started.

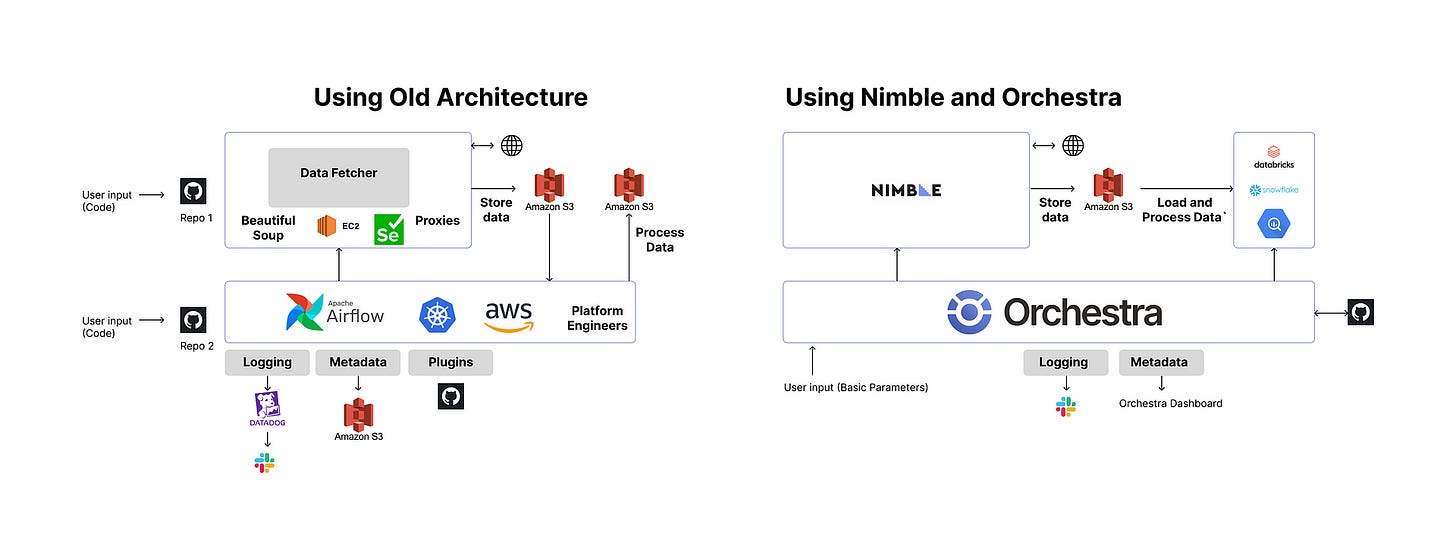

This means there is minimal learning curve required to make full-use of Nimble’s AI-web scraping abilities, in stark contrast to trying to scrape the web at scale using a combination of Beautiful Soup, Selenium, and own-managed infrastructure.

Secondly, by handling the execution, monitoring and alerting for the end-to-end data pipeline, Orchestra can save hundreds of engineer hours writing boilerplate infrastructure required to robustly and efficiently interact with Nimble’s API. The Architecture comparison diagram illustrates this below:

Finally, leveraging Orchestra is the most reliable way to ensure data is scraped accurately and consistently. By integrating deeply with Nimble’s API and handling mechanisms such as retries and timeouts, Orchestra provides an additional layer of defence against DAG failure.

It should also go without saying that integrating Nimble into Orchestra’s data lineage and Data Asset Graph brings is extremely valuable. Orchestra’s engine is context/asset-aware, which ensures files scraped using Nimble render as Data Assets underpinning models in S3, Snowflake, Databricks, Bigquery and so on.

Examples of leveraging AI-webscraping

Intelligence gathering

Building a pipeline to gather near real-time pricing information using Nimble and Orchestra takes minutes. You can read more about how to implement an Intelligence Gathering pipeline here.

Intelligence gathering can be used to scrape data from websites with reviews for sentiment analysis, or from public websites. This data can then be enriched and aggregated over time to product valuable data products.

Real-time pricing information / Competitive Analytics

Nimble’s eCommerce API focusses on parsing data specifically from e-Commerce-style websites. These are unique due to the generally common HTML structure of having cards with images, prices, descriptions, SKU numbers and so-on.

Companies in the retail or e-Commerce spaces can leverage NImble and Orchestra to instantly gather real-time pricing data from the market in order to identify opportunities and trends. This represents enormous opportunities for maximising revenue in real-time during periods of high traffic such as Black Friday.

While the e-commerce industry has extremely sophisticated tools for analysing internal data and web traffic, the extent to which these make use of external data is much lower. This changes now with the advent of AI-powered webscraping and enterprise grade, near real-time data orchestration.

Conclusion

We’re really excited to be working with the team at Nimble and we cannot wait to see what you build.

At Orchestra, our mission is to make Data Teams’ lives easier and to help organisations drive value from their data. The Orchestra and Nimble integration represents the easiest possible way to achieve near real-time data from any internet data source with an unprecedented level of accuracy.

By leveraging Artificial Intelligence, we are also helping companies in traditional sectors like retail, logistics, and manufacturing accelerate Generative AI adoption where the value is highest.

If you’d like to learn more about Orchestra or Nimble or read more about our use-cases, please get in touch or find more resources below!

Orchestra

Orchestra + Nimble demo 📹 link

Orchestra 🌐 link

Orchestra Free Tier 🔗link

Nimble

Nimble🌐 link

Nimble Free Trial 🔗link

Find out more about Orchestra

Orchestra is a platform for getting the most value out of your data as humanely possible. It’s also a feature-rich orchestration tool, and can solve for multiple use-cases and solutions. Our docs are here, but why not also check out our integrations — we manage these so you can get started with your pipelines instantly. We also have a blog, written by the Orchestra team + guest writers, and some whitepapers for more in-depth reads.