CI / CD for Data Engineering (Python)

Understanding basic CI / CD for Python code in a Data Engineering context is a must for Data Engineering Leaders

Introduction

CI / CD is super important, but getting started can be tough. This short article tells you how.

“DevOps” is getting a lot of heat right now, not just in data but in software engineering too. That’s because treating it as a separate specialism implies dedicated DevOps teams. That degree of specialisation makes sense for large organisations, but in smaller ones, where skills across the whole stack are needed, it leaves software engineers woefully inept at their jobs: capable only of writing average code, with no idea of how to actually get it working in Production.

In Data Engineering, DevOps can be quite easy. For example, deploying dbt via a CI/CD pipeline is a well-known pattern.

However, what about arbitrary Python or JavaScript code? Do you use a linter? Do you use a Python package like pytest to run unit tests? What about integration tests?

I found developing a simple GitHub workflow file a bit more challenging than I wanted the first time I did it, so here are some tips to save you some time (they will probably go out of date in a few years)!

Unit tests

A unit test tests a single piece of functionality. Suppose you have a function in a data science repo that demeans some data (subtracts the mean from every value). You should check that this function works by running it on a test dataset and asserting the output. Something like this:

    import pandas as pd

    def demean_data(df):
        # Subtract each column's mean from its values
        return df - df.mean()

    test_df = pd.DataFrame(...)  # small, hand-built test dataset

    def test_demean_data():
        demeaned = demean_data(test_df)
        expected = pd.DataFrame(...)  # the output you expect for test_df
        pd.testing.assert_frame_equal(demeaned, expected)

So demean_data() gets tested by test_demean_data(): if the function raises an error, or returns anything other than the expected output for the test DataFrame, the test fails.
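As a concrete, runnable version of the pattern above, here is a minimal sketch. The column names and values are made up for illustration, and pd.testing.assert_frame_equal is one reasonable way to compare DataFrames, not necessarily what you would pick in your own repo.

```python
import pandas as pd


def demean_data(df: pd.DataFrame) -> pd.DataFrame:
    # Subtract each column's mean from every value in that column
    return df - df.mean()


def test_demean_data():
    # Hypothetical test data: column "a" has mean 2, column "b" has mean 20
    test_df = pd.DataFrame({"a": [1.0, 2.0, 3.0], "b": [10.0, 20.0, 30.0]})
    expected = pd.DataFrame({"a": [-1.0, 0.0, 1.0], "b": [-10.0, 0.0, 10.0]})
    # Raises AssertionError with a readable diff if the frames differ
    pd.testing.assert_frame_equal(demean_data(test_df), expected)
```

Keeping the test data tiny means you can work out the expected output by hand, which is the whole point of the assertion.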

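You can use something like pytest to check this. Note that running the bare “pytest” command sometimes doesn’t work, often because your project directory is not on Python’s import path. Running pytest as a module adds the current directory to the path:

    python -m pytest

Integration tests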

Integration tests test the connection between your application and third-party APIs. This is pretty common for data engineers, as we use integrations to do things like ingest data, push data back out via reverse ETL, and send Slack alerts.

An integration test looks something like this:

    class SalesforceClient(HTTP):  # HTTP: your own base client that provides request()
        def __init__(self, base_url):
            # Assumes the HTTP base class takes the base URL
            super().__init__(base_url)

        def basic_request(self):
            return self.request(method='GET', endpoint='basic_endpoint')

    base_url = ''

    def test_salesforce_endpoint():
        salesforce_client = SalesforceClient(base_url)
        response = salesforce_client.basic_request()
        assert response.status_code == 200
        assert response.json() == {...}

Here there is a method for calling an arbitrary endpoint of an integration, in this case Salesforce. We invoke this method in a test. The test asserts the status code and the output, and fails if the response is not what we expect.
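The snippet above depends on an HTTP base class and a live Salesforce endpoint, neither of which is shown here. To illustrate the shape of such a test in a form you can run anywhere, the sketch below swaps in a stub server on localhost. StubHandler, SimpleClient and the response payload are all hypothetical names and values chosen for the example; a real integration test would point at the third party's sandbox instead.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class StubHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for the third-party API."""

    def do_GET(self):
        body = json.dumps({"status": "ok"}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep test output quiet


class SimpleClient:
    """Minimal client with one method per endpoint, like the example above."""

    def __init__(self, base_url):
        self.base_url = base_url
        # An empty ProxyHandler ignores any proxy settings from the environment
        self._opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))

    def basic_request(self):
        url = f"{self.base_url}/basic_endpoint"
        with self._opener.open(url, timeout=5) as resp:
            return resp.status, json.loads(resp.read())


def test_basic_endpoint():
    server = HTTPServer(("127.0.0.1", 0), StubHandler)  # port 0: pick a free port
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        client = SimpleClient(f"http://127.0.0.1:{server.server_port}")
        status, payload = client.basic_request()
        assert status == 200
        assert payload == {"status": "ok"}
    finally:
        server.shutdown()
        server.server_close()
```

The test body has the same three moves as the Salesforce version: build the client, call the endpoint, assert on status code and payload.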

FastAPI

We should also test the endpoints we expose with FastAPI if we set up an App Service. This is pretty easy to set up, and the official testing guide is a great resource if anything above isn’t clear: https://fastapi.tiangolo.com/tutorial/testing/

Bonus: Linting

Lint is the computer science term for a static code analysis tool used to flag programming errors, bugs, stylistic errors and suspicious constructs.

Given that data engineering can be cowboy-esque, this probably has a lot of value. I recommend using Pylint in VS Code: https://code.visualstudio.com/docs/python/linting

This is awesome because every time you save your file, you get blue and yellow squigglies that flag potential errors. If the warnings are super annoying, you can disable them all and still have your code auto-formatted nicely on save by pairing the linter with a formatter such as Black. So this is something you should definitely have.
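To make “static code analysis” concrete, here is a toy sketch of one check a linter performs: flagging imports that are never used. It is an illustration built on Python's ast module, not how Pylint is actually implemented, and the function name unused_imports is made up for the example.

```python
import ast


def unused_imports(source: str) -> list[str]:
    """Return imported names that are never referenced in the source."""
    tree = ast.parse(source)  # parse only; the code is never executed
    imported = set()
    used = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            for alias in node.names:
                # "import a.b" binds the name "a"
                imported.add(alias.asname or alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom):
            for alias in node.names:
                imported.add(alias.asname or alias.name)
        elif isinstance(node, ast.Name):
            used.add(node.id)
    return sorted(imported - used)


sample = "import os\nimport sys\n\nprint(sys.argv)\n"
print(unused_imports(sample))  # ['os']
```

The key property is that the sample code is inspected without being run, which is why a linter can flag problems on save.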

CI / CD

Ideally, we want to:

1. Write code

2. Test and lint our code locally

3. Push it onto a branch and, prior to merging the branch, run a build of the code in the merge environment (“staging”) that tests whether our local code will work elsewhere
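The second step above (test and lint locally) can be wrapped in a small script so that it is one command to run before pushing. This is a sketch under assumptions: the function name run_checks is made up, “src” is a hypothetical package directory, and you should swap in whichever linter and test runner your project uses.

```python
import subprocess
import sys

# Assumed commands; adjust to your project.
DEFAULT_CHECKS = [
    [sys.executable, "-m", "pylint", "src"],  # "src" is a hypothetical directory
    [sys.executable, "-m", "pytest"],
]


def run_checks(checks=DEFAULT_CHECKS):
    """Run each command in order; return the first non-zero exit code, else 0."""
    for command in checks:
        print("Running:", " ".join(command))
        result = subprocess.run(command)
        if result.returncode != 0:
            return result.returncode  # stop at the first failing check
    return 0
```

Calling sys.exit(run_checks()) from a script gives you a non-zero exit code on failure, which is the same signal the CI build relies on.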

Step 3 requires us to write a workflow YAML file under .github/workflows/. It should look something like this:

    # Docs for the Azure Web Apps Deploy action: https://github.com/Azure/webapps-deploy
    # More GitHub Actions for Azure: https://github.com/Azure/actions
    # More info on Python, GitHub Actions, and Azure App Service: https://aka.ms/python-webapps-actions

    name: Build and deploy Python app to Azure Web App - {app name}

    on:
      push:
        branches:
          - main
      workflow_dispatch:

    jobs:
      build:
        runs-on: ubuntu-latest

        steps:
          - uses: actions/checkout@v2

          - name: Set up Python version
            uses: actions/setup-python@v1
            with:
              python-version: '3.9'

          - name: Create and start virtual environment
            run: |
              python -m venv venv
              source venv/bin/activate

          - name: Log in with Azure
            uses: azure/login@v1
            with:
              creds: '${{ secrets.AZURE_CREDENTIALS }}'

          - name: Install dependencies
            run: pip install -r requirements.txt

          - name: Configure AWS Credentials
            uses: aws-actions/configure-aws-credentials@v1
            with:
              aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
              aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
              aws-region: ${{ secrets.AWS_REGION }}

          - name: Run test
            env:
              ENVIRONMENT: ${{ secrets.ENVIRONMENT }}
            run: |
              set
              python -m pytest

          - run: echo "🍏 This job's status is ${{ job.status }}."

          - name: Upload artifact for deployment jobs
            uses: actions/upload-artifact@v2
            with:
              name: python-app
              path: |
                .
                !venv/

      deploy:
        runs-on: ubuntu-latest
        needs: build
        environment:
          name: 'Production'
          url: ${{ steps.deploy-to-webapp.outputs.webapp-url }}

        steps:
          - name: Download artifact from build job
            uses: actions/download-artifact@v2
            with:
              name: python-app
              path: .

          - name: 'Deploy to Azure Web App'
            uses: azure/webapps-deploy@v2
            id: deploy-to-webapp
            with:
              app-name: 'app_name'
              slot-name: 'Staging'
              publish-profile: ${{ secrets.AZUREAPPSERVICE_PUBLISHPROFILE_DE07DE69075147AF950E1A0DB583CAB9 }}

Stuff you should know:

The Python version here generally cannot be pinned to the patch level (three components, such as 3.9.0); that only works for specific versions. Specifying 3.9 in the build therefore gets you 3.9.x. If you develop on 3.9.0 and the build picks up 3.9.17, this could cause errors.

The build runs on Linux, but I develop on Windows. This leads to errors.

If you need to authenticate to Azure using DefaultAzureCredential(), you can’t rely on environment variables like you can locally. You need to follow this and then get a federated credential for your app like this.

If you’re configuring AWS, you need to log in to AWS using the Configure AWS Credentials step above.

GitHub also has its own environment variables. These are found in the repository settings (see screenshot below).

Setting an environment variable via the “env” key applies only to the given step, unless you put it higher up the hierarchy, at the job or workflow level. You can run a “set” command to see which environment variables are set.

This yields:

Here the key step is Run test, which simply runs all of our unit tests and integration tests (ideally these are done in two separate stages).

The build fails if the tests fail, and the deploy job only runs after a successful build, so you never deploy code with broken tests. Win!
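Because the Run test step passes ENVIRONMENT through its env block, the test code can read it from os.environ. Here is a minimal sketch, assuming a hypothetical helper called get_environment, that fails with a readable message when the variable was not passed to the step (easy to hit, given the step-level scoping noted above).

```python
import os


def get_environment(env=None):
    """Return the ENVIRONMENT setting, or raise a clear error if it is missing."""
    # Accept a mapping so tests can inject values without touching os.environ
    env = os.environ if env is None else env
    value = env.get("ENVIRONMENT")
    if not value:
        raise RuntimeError(
            "ENVIRONMENT is not set; in GitHub Actions, pass it via the step's env block"
        )
    return value
```

Failing early like this turns a confusing downstream error into a one-line explanation in the build log.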

Bonus

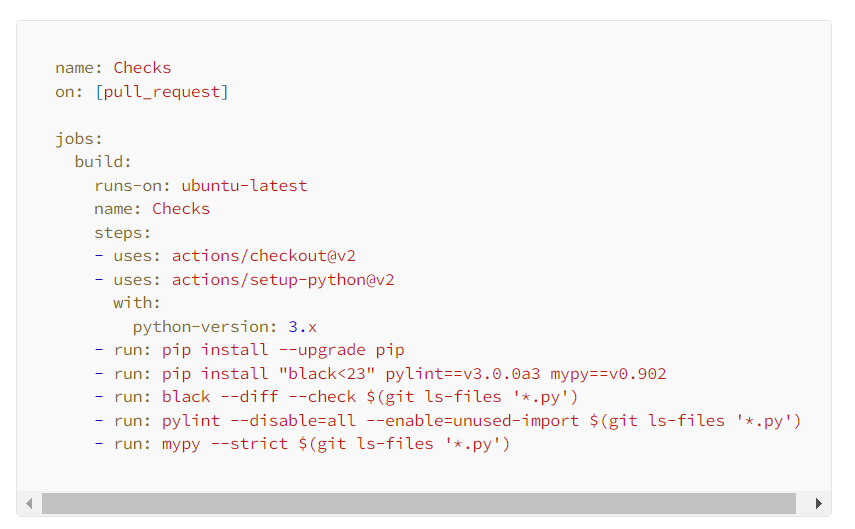

Add the pylint step — see here and below

    name: Checks

    on: [pull_request]

    jobs:
      build:
        runs-on: ubuntu-latest
        name: Checks
        steps:
          - uses: actions/checkout@v2
          - uses: actions/setup-python@v2
            with:
              python-version: 3.x
          - run: pip install --upgrade pip
          - run: pip install "black<23" pylint==v3.0.0a3 mypy==v0.902
          - run: black --diff --check $(git ls-files '*.py')
          - run: pylint --disable=all --enable=unused-import $(git ls-files '*.py')
          - run: mypy --strict $(git ls-files '*.py')

Make the build a required check for approving a merge, rather than something that only runs on merge.

Conclusion

Having a setup like this would have saved so much time in my prior job. I would definitely want the ability to do this from day one, though perhaps not by writing code for absolutely everything (data engineers are focused on business value, after all). I think there is a lot of value in integration tests, particularly because we rely on integrations so much, and in unit tests. Checking the validity of the data and responses getting pushed out is a really easy way to ensure apps are working, and I’ve lost count of the number and types of errors this would have caught (article part two to come).