Column level lineage is out: AI is in

Why column-level lineage will rapidly be replaced by artificial intelligence

Introduction

Column-level lineage and lineage tools in general seemed to take the data world by storm in 2021. They offer the promise of being able to trace dependencies across “assets” — persistent objects in your data ecosystem like sets of files, tables in a warehouse, or dashboards.

The idea was that having this layer of information would help data teams rapidly debug data release pipelines when they broke, and would also help data teams understand the impact of changes to downstream processes that could result from code changes. There have been even more ambitious projects like SQLMesh (and opinionated, note the description and the emphasis on “actually” in “SQLMesh actually understands your SQL”). Most lineage tools also painstakingly calculate column-level lineage, which allows you to trace a metric all the way through your project.

In this article, I’ll teach you how you can rapidly debug your pipelines without using column-level lineage.

The motivating problem

Some Data Teams have the following problems:

They build some data in a table, somewhere

They test the data

The test fails

They don’t understand what caused the test to fail

For example, suppose you expect total sales in stores to be greater than $1000 every day. One day, you get sales of $0. This is very strange. You look at your test, and see it’s testing a column called sales, and the values are all fine. Why is the test failing?

The test is failing because you group by store and select where store is not null. On this occasion, store is null. And your average sales by store is 0. With column level lineage, you see average sales by store depends not only on salesbut also on store.

You are able to debug this quickly with column-level lineage.

A solution with AI

Of course, column level lineage isn’t the answer really, is it.

Something I was taught was that when you have a problem, or pose someone poses you a question, you should try to understand what the real reason behind the problem or question is.

If I say “I’d like to go home now”, it’s not because I’m filled with a desire to go home. If it’s me, I’m probably trying to communicate that I am sick of walking, or I’m hungry. “What you’re saying is, you’re hungry, isn’t it Hugo?”

When we see a test break. We say “oh no my test has broken”.

We don’t mean “Shit please give me column level lineage”.

We mean to say “I want to know exactly why my test broke”. Sometimes we also want to know exactly what to do to fix it. Sometimes we want a PR raised automatically (but not always).

Now — this seems like something Chat GPT would answer pretty well if you ask me. We can specify the thing we are asking in natural language very easily. And we want an actionable, verbal response.

So let’s experiment…

Experiments with GPT

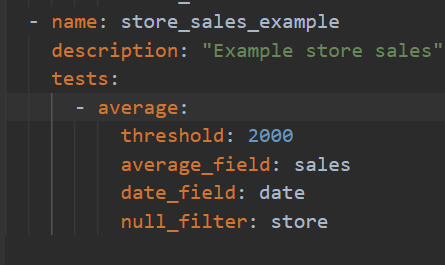

We can experiment with GPT by modelling the store and score example from the previous section using SQL and the popular data quality testing framework, dbt test.

Here is my test:

{% test average(model, average_field, date_field, null_filter, threshold) %}

WITH averages AS (

SELECT

{{date_field}} date_,

{{null_filter}} store,

avg({{average_field}}) avg_

FROM {{model}}

group by 1,2

)

SELECT *

FROM averages

WHERE avg_ > {{threshold}} or avg_ is null

{% endtest %}Here is the data:

I test to ensure store-level sales never goes over 2000

And because I engineered the raw data to fail, I get a failure in dbt

This says that I have a store with more than 2k sales, so I know immediately what the problem is at a high level. I do not know what is causing the problem.

There are two important pieces of information you have access to but dbt doesn’t. One is the test information from the dbt artifact (which is stored somewhere, but not really used by dbt). The other is the (in this case) Snowflake metadata. You could also fetch some of this data from the compiled part of the dbt repo, for which you would need a Github Connection.

I send these pieces of information with a prompt to Chat GPT, explaining that I ran a dbt test:

Prompt

I ran this query as a dbt test:

WITH averages AS (

SELECT

date date_,

store store,

avg(sales) avg_

FROM SNOWFLAKE_WORKING.PUBLIC_clean.store_sales_example

group by 1,2

)

SELECT *

FROM averages

WHERE avg_ > 2000 or avg_ is null

and I used this SQL to generate the table:

create or replace table SNOWFLAKE_WORKING.PUBLIC_clean.store_sales_example

as

(with base as (

select

case when date = '05-Nov-2023' then null else store end as store,

date,

sales

from SNOWFLAKE_WORKING.PUBLIC.store_sales

)

select

store,

date,

sum(sales) sales

from base

group by 1,2

);

But the test was failing. The test checks to see if average sales in a given store is more than 2000 on a given day and fails if so. Why did it fail?And Open AI responds with the below:

The test you’ve described aims to calculate the average sales for each store on a given day and check if the average sales exceed 2000. Based on the SQL provided, there might be a reason why the test is failing:

In the SQL script used to generate the table SNOWFLAKE_WORKING.PUBLIC_clean.store_sales_example, you have a condition within the CTE named base:

case when date = '05-Nov-2023' then null else store end as storeThis condition appears to filter out the store data when the date is ‘05-Nov-2023’. When computing the averages in the subsequent query:

WITH averages AS (

SELECT

date date_,

store store,

avg(sales) avg_

FROM SNOWFLAKE_WORKING.PUBLIC_clean.store_sales_example

GROUP BY 1, 2

)

SELECT *

FROM averages

WHERE avg_ > 2000 OR avg_ IS NULL;The filter within the CTE base might be causing the exclusion of data for the date '05-Nov-2023', which in turn might be leading to missing values (NULL) in the avg_ column for some stores on that date.

As a result, the test could be failing because it’s evaluating stores on dates where data has been filtered out, leading to NULL values in the computed averages.

To resolve this issue, you might want to review the conditional logic used in the CTE base to ensure it's not inadvertently excluding data needed for the test calculation on '05-Nov-2023'. Adjusting the conditions there could help ensure that all necessary data is included for the test to accurately calculate average sales and pass the condition of being greater than 2000 for specific stores on that date.

Holy shit. 10/10.

Conclusion

Now of course, in this example Chat GPT detects the error because I engineered it. The error is in the code. If the error was caused by the data, you would need to send GPT the metadata (stuff like nulls by column etc) or you would need a service that does some checking using GPT via a flowchart.

Furthermore, if the error was deeply nested you’d potentially need to feed it your whole repository for it to understand the dependencies.

But that’s not really that hard to do. Databricks’ AI assistant is just that — a distributed SAAS version of an AI assistant trained on your repository. It’s the same thing as Github Copilot, which of course has short-term memory and gets to know your programming quirks.

The point this illustrates is using GPT models to tell you what went wrong is quick, and extremely accurate.

And to my point earlier, it gets at the heart of the question — I asked: why is my test failing? It wasn’t apparent from dbt’s test output, nor from the column-level lineage. Open AI explained to me exactly what was going wrong, and where. It told me how to fix it. And it’s probably working on PR’ing a solution as we speak.

So to conclude, I believe services with metadata and operations will begin to embed themselves in workflows to give data engineers and analytics engineers full visibility on how to fix errors. Rather than rely on architecturally cumbersome tools like open lineage for debugging, platforms with access to operational metadata will instead provide the crucial service of debugging using embedded GPT models trained on internal codebases. Column-level lineage, IMO one of the biggest data fads of the tech boom, is out: AI is in. Merry Christmas🎄