Data Leadership #4: knowing when to rip out your infrastructure

Working out the needs of an organisation is a journey. Sometimes it is just best to start afresh.

Punch something and start again. Credit to Barakamon.

Introduction

I’ve written before about a data hierarchy of needs, borrowed from Maslow, and this article is really for those of you who aren’t hitting that hierarchy. To briefly recap, the hierarchy moves like so, with the most basic needs living at the bottom of the pyramid.

In this article, I’m going to speak about three scenarios where you’ll benefit from removing or switching out part of your stack or data operation. I believe these scenarios are all too common, so hopefully they are relatable.

The relevance of the hierarchy is that it helps us understand what we should prioritise. I bet you don’t have all of your data apps network segregated. I bet your BI tool is full of stuff people don’t use. What’s more, I bet you over-compute in your cloud data warehouse. We can examine these in the following scenarios.

Case 1: what’s security?

Something I learned at Codat was that Software Engineers are obsessed with exposure. Not of themselves, of course, but of software assets they manage. This could be anything from an API endpoint to an endpoint for an object in blob storage.

This is because exposing resources to the internet increases the risk of an attacker compromising a software system. For example, if I maintain an API with a public endpoint that’s available to anyone via the internet, then anyone who finds it could DDoS the API, bringing it down and compromising the service.

One way to mitigate this is to place every asset owned by a software team in a private network or behind an API Gateway. Keeping assets within a private network and maintaining good security policies on the access point to the network (in this case an API Gateway) goes a long way towards minimising the attack surface area, and hence the probability that sensitive data exits the system.

This relates to data in an almost fundamental way, because data architecture is an extension of software architecture. Can you see what’s wrong with the below?

In this example, although the software org is “locked down” (everything is protected behind an API Gateway) there is a leaky bucket! The Data Team have their own data lake, their own cloud data warehouse, and what’s more, they haven’t locked down their services and they’re all exposed to the internet.

Indeed, some of this is unavoidable; third-party SaaS apps will always open their APIs up to the internet. The task for Data Teams is then to ensure that secrets are kept secure. But what if a Data Team maintains a data lake? Is that secured in the network? What if data is being piped to places that are accessible to anyone, e.g. a Google Sheet?

How to solve

Fortunately, this is quite an easy one to solve, at least in theory. 70% of this problem is simply being aware it exists. Home-grown structures like data lakes, hosted repositories (e.g. orchestration packages) and the like can all be included in the network in much the same way as other pieces of infrastructure.
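Since awareness is most of the battle, a simple inventory can make the leaky bucket visible. The following is a minimal sketch only: the `DataAsset` fields, the asset names and the two helper functions are all hypothetical, and in practice you would populate the inventory from your cloud provider's own tooling rather than by hand.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataAsset:
    name: str                  # hypothetical asset name
    kind: str                  # e.g. "data_lake", "orchestrator", "saas"
    publicly_reachable: bool   # True if reachable from the open internet
    self_hosted: bool          # True if the team controls where it is deployed


def assets_to_bring_into_network(assets):
    """Self-hosted assets that are exposed and could live in the private network."""
    return [a.name for a in assets if a.self_hosted and a.publicly_reachable]


def assets_needing_process_controls(assets):
    """Third-party assets that stay public, so rely on secrets, RBAC and reviews."""
    return [a.name for a in assets if not a.self_hosted and a.publicly_reachable]


# Illustrative inventory; every entry here is made up.
INVENTORY = [
    DataAsset("raw-data-lake", "data_lake", publicly_reachable=True, self_hosted=True),
    DataAsset("orchestrator-ui", "orchestrator", publicly_reachable=True, self_hosted=True),
    DataAsset("internal-api", "api", publicly_reachable=False, self_hosted=True),
    DataAsset("bi-tool", "saas", publicly_reachable=True, self_hosted=False),
]

if __name__ == "__main__":
    print("Move into private network:", assets_to_bring_into_network(INVENTORY))
    print("Harden with process:", assets_needing_process_controls(INVENTORY))
```

The split into two lists mirrors the two remedies in this section: things you host yourself can be moved behind the network boundary, while third-party tools need process.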

As for third-party SaaS apps, the best thing to do is implement robust processes and perhaps pay for enterprise security if you can. For example, ensuring tokens get rotated is good practice. Implementing role-based access control can’t hurt. Neither can doing periodic access reviews.
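Token rotation is the easiest of these to automate a check for. Here is a rough sketch, assuming you can export each token's issue date from your secrets manager; the 90-day policy, the token labels and the `tokens_due_for_rotation` helper are illustrative assumptions, not a recommendation from any particular vendor.

```python
from datetime import date, timedelta

# Assumed policy: rotate anything older than 90 days.
MAX_TOKEN_AGE = timedelta(days=90)


def tokens_due_for_rotation(tokens, today, max_age=MAX_TOKEN_AGE):
    """Return labels of tokens older than max_age.

    `tokens` maps a token label to the date it was issued.
    """
    return sorted(
        label for label, issued in tokens.items() if today - issued > max_age
    )


# Hypothetical tokens and issue dates.
TOKENS = {
    "warehouse-service-account": date(2024, 1, 10),
    "bi-tool-api-key": date(2024, 5, 1),
    "ingestion-connector": date(2023, 11, 20),
}

if __name__ == "__main__":
    print(tokens_due_for_rotation(TOKENS, today=date(2024, 6, 1)))
```

Run on a schedule, a check like this turns "tokens should get rotated" from a good intention into something that shows up in an access review.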

There are also some enterprise features (mainly private link) that allow you to ringfence certain endpoints to only be accessed from within the private network you’ve already got set up. This is the best thing to do, but is fairly sophisticated from the security perspective.

Key takeaway: SHIFT LEFT ON SECURITY and potentially rip out some stuff you don’t need if you have no hope of network segregating it.

Case 2: no-one does BI anymore

Self-service analytics is a great thing. It’s also not a new thing, but it has very much been bandied around alongside newer technologies and is the fundamental goal of data mesh.

Perhaps it’s telling that one of Alteryx’s slogans reads as “Analytics for All”:

Enabling analytics at scale for the masses is hard, and there are probably errors you made when you tried to set this up. I certainly made a lot the first time, including but not limited to:

Failing to establish rigorous data quality principles

Not being selective about which data and use-cases we decided to support

Rolling out self-serve analytics too fast

All of which, of course, led to immense distrust in data, not to mention hundreds of stale dashboards and many dissatisfied stakeholders. This is not a good result. While I could point to growing Looker usage, there were times when I felt it was driven as much by growing headcount and core usage of a few good dashboards as by the efficacy of our self-serve system. Fortunately, there is a solution.

How to solve

Depending on what BI tool you have, it may be good to actually just start again. Sure, the LookML semantic layer is nice, but there are so many new semantic layers you might as well try a new one. Not to mention that Looker is expensive — there are some other really good and reasonable offers like self-hosting Mode or Astrato.

The biggest way to solve this, however, is to do a really critical evaluation of what data use-cases you’re supporting. How many people use that marketing data table you built? Is that financial dataset the sales leadership want to rely on really of sufficient data quality? Who is using the product analytics dashboard?

These are all questions you should ask yourself. You may find that if you have poor uptake, it can be worth ripping out your BI tool, perhaps turning off and deleting a lot of dbt, and simply starting again. Less is more.
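The usage questions above can be answered with data rather than gut feel. Below is a minimal sketch, assuming you can export per-dashboard usage from your BI tool; the `DashboardUsage` fields, the thresholds and the dashboard titles are hypothetical and should be tuned to your organisation.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class DashboardUsage:
    title: str
    last_viewed: date
    distinct_viewers_90d: int  # distinct viewers over the last 90 days


def removal_candidates(usage, today, max_idle_days=90, min_viewers=3):
    """Flag dashboards that are stale or have almost no audience."""
    candidates = []
    for d in usage:
        idle_days = (today - d.last_viewed).days
        if idle_days > max_idle_days or d.distinct_viewers_90d < min_viewers:
            candidates.append(d.title)
    return candidates


# Made-up usage export.
USAGE = [
    DashboardUsage("Marketing attribution", date(2024, 1, 5), 0),
    DashboardUsage("Sales pipeline", date(2024, 5, 30), 25),
    DashboardUsage("Product analytics", date(2024, 5, 20), 2),
]

if __name__ == "__main__":
    print(removal_candidates(USAGE, today=date(2024, 6, 1)))
```

A list like this is a starting point for conversations with stakeholders, not an automatic delete list: a dashboard with two viewers may still matter if those two are the finance team at month end.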

Case 3: your Snowflake bill is what?

Many people have written extensively about why cloud bills get so high. However, rather than explain this away solely as data practitioners falling for some elaborate VC and influencer marketing scheme, I believe Snowflake costs can be explained through an architectural lens.

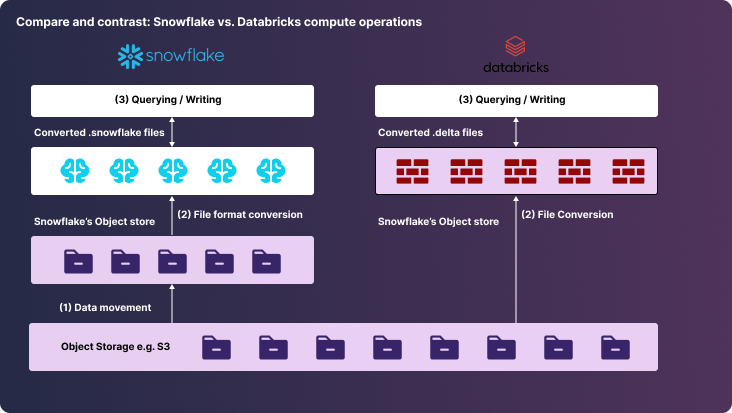

With Snowflake, on the compute side, you pay for (1) data movement from your object store to Snowflake (if you have a data lake). You pay (2) to convert to their file format. You pay (3) to query i.e. to read and write data. You pay twice for storage in this scenario (the pink boxes).

Under a data lake architecture like Databricks, you pay twice for storage as well because although you don’t need another object store, you still need to store the .delta files somewhere. You don’t pay for data movement really as everything’s in your data lake, but you do also pay for file conversion and for querying (2 and 3).
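To make the comparison concrete, here is a toy cost model of the two architectures. It is only a sketch of the structure of the bill: every number is a made-up placeholder rather than a real Snowflake or Databricks price, and the `MonthlyCosts` class is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MonthlyCosts:
    storage_copies: int      # how many times the data is stored
    storage_per_copy: float  # placeholder cost of one copy
    data_movement: float     # (1) moving data from object store to warehouse
    file_conversion: float   # (2) converting to the engine's file format
    querying: float          # (3) reading and writing data

    @property
    def total(self):
        storage = self.storage_copies * self.storage_per_copy
        return storage + self.data_movement + self.file_conversion + self.querying


# Placeholder figures in arbitrary currency units, for illustration only.
warehouse_on_lake = MonthlyCosts(
    storage_copies=2, storage_per_copy=100.0,
    data_movement=300.0, file_conversion=200.0, querying=1000.0,
)
lakehouse = MonthlyCosts(
    storage_copies=2, storage_per_copy=100.0,
    data_movement=0.0, file_conversion=200.0, querying=1000.0,
)

if __name__ == "__main__":
    print("Warehouse on top of a lake:", warehouse_on_lake.total)
    print("Lakehouse:", lakehouse.total)
    print("Difference:", warehouse_on_lake.total - lakehouse.total)
```

With identical placeholder inputs for everything else, the only line item that differs is data movement. Whether that holds for your bill depends on the real figures for conversion and querying on each platform, which this sketch deliberately does not claim to know.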

I’ve written extensively about Data Lakes in the Data Leadership Journal, and this is the reason, in my eyes, that Cloud Warehouse bills can get high: cloud warehouses force you to move data from your cloud storage to a cloud provider’s via the internet, and that costs lots of money. Furthermore, the incentives to move raw data in create a lot of cost due to ingress charges. A final point worth noting is that optimising cloud warehouses is not a homogeneous exercise. It’s different for different warehouses.

Time to remove the warehouse?

How to solve

A Databricks extremist would argue yes. Get off Snowflake and just use Databricks.

However, as we’ve shown before, it’s not that simple. Databricks (DBX) is also full of cost-optimisation quirks, it’s much harder to use for the layman, and it doesn’t obviously present cost improvements. Still, doing everything as a Spark job is definitely one approach here.

In conjunction with Case 2, though, re-evaluating what data gets moved to a cloud data warehouse is important. For example, it may be that even though GA4 sends data in a deeply nested format, you don’t mind it getting dumped into BigQuery because it’s so convenient.

However, perhaps you have event data you’re streaming into Snowflake. Here, you actually get millions of events per minute, there isn’t a whole lot of granular detail, and really you just want to send by-minute aggregates into Snowflake (or perhaps even 15-minute buckets!). This could be done using a streaming engine or by running Spark on the raw files in the data lake. You could even use DuckDB.

The point is that, because of the volumes involved, you rarely encounter a scenario where working with aggregates is more costly than working with raw data. Of course there are exceptions, like running lots of window functions. However, it is generally risky with respect to cost to assume you will do all your transformation in Snowflake, because that means moving all your raw data there first as a matter of course.
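The pre-aggregation idea can be sketched in a few lines. This is plain Python standing in for whatever engine you would actually use (a streaming engine, Spark or DuckDB); the event shape, the sample events and the helper names are assumptions made for illustration.

```python
from collections import Counter
from datetime import datetime


def bucket_start(ts, bucket_minutes=1):
    """Floor a timestamp to the start of its bucket.

    Assumes bucket_minutes divides 60 evenly (1, 5, 15, 30, ...).
    """
    floored_minute = ts.minute - (ts.minute % bucket_minutes)
    return ts.replace(minute=floored_minute, second=0, microsecond=0)


def aggregate_events(events, bucket_minutes=1):
    """Count events per (bucket start, event type)."""
    counts = Counter()
    for ts, event_type in events:
        counts[(bucket_start(ts, bucket_minutes), event_type)] += 1
    return counts


# Hypothetical raw events: (timestamp, event type).
EVENTS = [
    (datetime(2024, 6, 1, 12, 0, 5), "click"),
    (datetime(2024, 6, 1, 12, 0, 40), "click"),
    (datetime(2024, 6, 1, 12, 1, 10), "click"),
    (datetime(2024, 6, 1, 12, 14, 59), "view"),
    (datetime(2024, 6, 1, 12, 15, 0), "view"),
]

if __name__ == "__main__":
    by_minute = aggregate_events(EVENTS, bucket_minutes=1)
    by_quarter_hour = aggregate_events(EVENTS, bucket_minutes=15)
    print(len(EVENTS), "raw events")
    print(len(by_minute), "by-minute rows")
    print(len(by_quarter_hour), "15-minute rows")
```

On five sample events the saving is trivial, but the number of output rows is bounded by buckets multiplied by event types rather than by event volume, which is what matters at millions of events per minute.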

Conclusion

Turning off all your tools is probably not a wise thing to do, and indeed will be impossible if you’re anything larger than a mid-size startup. Critically re-evaluating what you’re doing, and how, is nonetheless a must.

We covered three scenarios that make doing this a highly beneficial exercise. These were A) where security of the entire org is compromised, B) where self-service analytics gets limited returns and C) where your cloud bill is too high.

To tie this back to the data hierarchy, I’m also going to invoke the three O’s: Orchestration, Observability and Operations. We saw that if we discount security, the first thing people build a stack for is functionality.

We’re now all in a bit of a v2 phase, where we also care about reliability and cost to maintain. Rather than focus on tools that do heavy lifting like ingestion, cloud warehouses etc., we’re interested in ways to observe our data pipelines. We want data release pipelines that are robust and efficient, but that also enforce data quality. They are, after all, data release pipelines; they wouldn’t be much good if they released bad quality data into the hands of end stakeholders. These are obviously orchestrated, and form part of a holistic data operation. Making this easy for data teams is the goal of Orchestra.

We may find we have too much functionality, which is why, as we ascend the hierarchy, we can remove some. We can also spend more time thinking about the operation and the system as a whole. Let us not forget the pinnacle of this hierarchy is simplicity. One day we may use only a single tool for everything, having set up and ripped out many, many tools in the process. 🔧