Deploying Orchestra as a Snowflake Native Application | The future of Postgres in Snowflake

Two things to consider here that appear unrelated but arent'

Foreword

Some of the biggest pains we see facing data engineers and analytics engineers is that they spend too much time maintaining boilerplate infrastructure, and still have no visibility into pipeline failures.

It means you’re constantly fighting fires and don’t have time to focus on building. What’s worse is that the business doesn’t trust the data.

At Orchestra we’re building a Unified Control Plane for Data Ops. A Data Status page if you like, with some incredible features to give data teams their time back so they can focus on building. You can try it now, free, here.

Introduction

Last week we launched Orchestra as an App on the Snowflake Marketplace.

The idea here is to make it easier for teams from an A) ecosystem but B) billing perspective.

Why manage things in two places when you can manage them in 1? Same goes for billing? Time saved is money saved.

I recently heard from Marco Sloot at Snowflake in London who is one of the lead engineers for Crunchy Data, the Postgres start-up Snowflake acquired earlier this year.

The vision is pretty cool - to build an RDS-Scale service from within Snowflake.

Very interesting, and very appealing. But the people in the room are confused. The people in the room are data people. They already have a database, called Snowflake. They don’t need another one, or if they do, they don’t know why they need it.

It is hard at this point to see the value of a Snowflake-deployed Postgres vs. a simply put Postgres scaler on the snowflake app marketplace. It will be interesting to see if data engineers and analytics folks begin to demand separate DBs.

Of course - the idea is you build your application layer with the storage ON Snowflake. That however, will require marketing to a completely different demographic. Just as Databricks are trying to do.

Time will tell - I do not think you can convince software engineers to decouple storage and compute from the rest of the cloud ecosystem.

It may yet be even harder to convince AI.

Orchestra as a Snowflake Native App

We’re thrilled to announce that Orchestra is now available within Snowflake as a Native Application.

In addition to the core Orchestra services, this includes the Orchestra Metadata App, which we’ve custom-built for Snowflake users looking to capitalise on AI as a Snowflake Native Application.

Many companies rely on Data Catalogs, Data Lineage, and Observability tools to collect their Metadata. However these approaches are not suited for an AI-Native Architecture.

Third party tools require extensive integration with Snowflake and other tools like Orchestrators

Metadata sits externally to Snowflake, which means MCP Servers must be stood up by Data teams for lineage data to be accessible

Data is not updated in real-time, which means AI Agents suffer from latency issues

When you run Orchestra on Snowflake, the Metadata App automatically moves data from the Orchestra metadata store to Snowflake which means this metadata is available in your single source of truth (“SSOT”) in real-time.

This means agents running data workflows have ready access to metadata. Agents running on Snowflake can therefore:

Easily Fix and recommend changes when pipelines fail

Easily identify anomlies and “long-running jobs”

Diagnose the root cause of errors and produce impact analysis

For the Organisation:

✅ Unified Observability in your warehouse: all the data showing why your pipelines are failing, who owns what, and how long everything is taking and costing is automatically centralised in your warehouse

✅ Cost Savings and Efficiencies: Analyse pipeline performance, monitor costs, track dependencies, and troubleshoot with unprecedented detail, all within your familiar Snowflake environment in an automated way

✅ AI and MCP ready: The Data Team don’t need to worry about building MCP-Servers to surface data for AI Agents — it’s all in Snowflake already

✅ Native Integration and security: You’re keeping architecture lean and elegant by keeping Snowflake as your data store and running Orchestra within it.

🔗 Learn more and get started with the Orchestra Metadata App today!

📚 Check-out the docs here

Setting up the Orchestra Snowflake Native App

Head over to the listing where you can learn more about the Snowflake Native App

2. Ensure you have an Orchestra Account

3. After installing the application in Snowflake, it’s time to follow the set-up steps here and below

Set-up Steps

Install the app from the Snowflake Marketplace

Grant the External Access Integration reference when prompted

Run the setup procedure to create the API access objects

Setup

After installation, run the following to create the API access objects:

-- Create the EAI objects (run this after granting the reference)

CALL core.create_eai_objects();This procedure creates the necessary stored procedures that can access the Orchestra API using the configured External Access Integration.

Usage

Fetch Metadata

The app provides three main procedures to fetch data from the Orchestra API:

-- Get pipeline runs (defaults to page 1, 100 results per page)

SELECT core.get_pipeline_runs();-- Get task runs (defaults to page 1, 100 results per page)

SELECT core.get_task_runs();

-- Get operations (defaults to page 1, 100 results per page)

SELECT core.get_operations();You can also specify custom pagination:

-- Get pipeline runs with custom pagination

SELECT core.get_pipeline_runs(2, 50); -- page 2, 50 results per pageLoad Data into Tables

The app automatically creates the following tables in the public schema:

pipeline_runs- Stores pipeline run metadatatask_runs- Stores task run metadataoperations- Stores operation metadata

To load data into these tables:

-- Load pipeline runs data (inserts new or updates existing)

CALL core.load_pipeline_runs();-- Load task runs data (inserts new or updates existing)

CALL core.load_task_runs();

-- Load operations data (inserts new or updates existing)

CALL core.load_operations();Each procedure will:

Fetch the latest data from the Orchestra API

Transform the data to match the table schema

Use MERGE operations to handle existing records:

New records: Inserted into the table

Existing records: Updated with latest data from the AP

Return a success message with the number of records processed

Note: These procedures are idempotent — you can run them multiple times safely without creating duplicates or errors.

Extract Specific Fields

-- Extract specific fields from pipeline runs

SELECT

value:id::STRING as pipeline_run_id,

value:pipelineId::STRING as pipeline_id,

value:runStatus::STRING as status,

value:startedAt::TIMESTAMP_NTZ as started_at

FROM TABLE(FLATTEN(input => core.get_pipeline_runs():results));Query the Loaded Data

Once data is loaded into tables, you can query it directly:

-- Query pipeline runs

SELECT * FROM public.pipeline_runs ORDER BY created_at DESC;-- Query task runs with status filter

SELECT * FROM public.task_runs WHERE status = 'SUCCESS';

-- Query operations for a specific pipeline run

SELECT * FROM public.operations WHERE pipeline_run_id = 'your-pipeline-run-id';Security

API keys are handled securely through Snowflake secrets

All API calls use HTTPS

Network access is restricted to

app.getorchestra.ioProper error handling for failed API calls

External Access Integration ensures secure external API access

Error Handling

The procedures return error information if API calls fail:

-- Check for errors in API response

SELECT

CASE

WHEN result:error IS NOT NULL THEN 'Error: ' || result:error::STRING

ELSE 'Success'

END as status

FROM (SELECT core.get_pipeline_runs() as result);Table Schemas

Pipeline Runs Table

id- Unique pipeline run identifier (PRIMARY KEY, NOT NULL, UNIQUE)pipeline_id- Pipeline identifierpipeline_name- Name of the pipelineaccount_id- Account identifierenv_id- Environment identifierenv_name- Environment namerun_status- Status of the pipeline runmessage- Status messagecreated_at- Creation timestampupdated_at- Last update timestampcompleted_at- Completion timestampstarted_at- Start timestampbranch- Git branchcommit- Git commit hashpipeline_version_number- Pipeline versionloaded_at- When the record was loaded into Snowflake

Use-case: identify long-running pipelines and see why:

Task Runs Table

id- Unique task run identifier (PRIMARY KEY, NOT NULL, UNIQUE)pipeline_run_id- Associated pipeline runtask_name- Name of the tasktask_id- Task identifieraccount_id- Account identifierpipeline_id- Pipeline identifierintegration- Integration typeintegration_job- Integration job namestatus- Task statusmessage- Status messageexternal_status- External system statusexternal_message- External system messageplatform_link- Link to external platformtask_parameters- Task parameters (VARIANT)run_parameters- Run parameters (VARIANT)connection_id- Connection identifiernumber_of_attempts- Number of execution attemptscreated_at- Creation timestampupdated_at- Last update timestampcompleted_at- Completion timestampstarted_at- Start timestamploaded_at- When the record was loaded into Snowflake

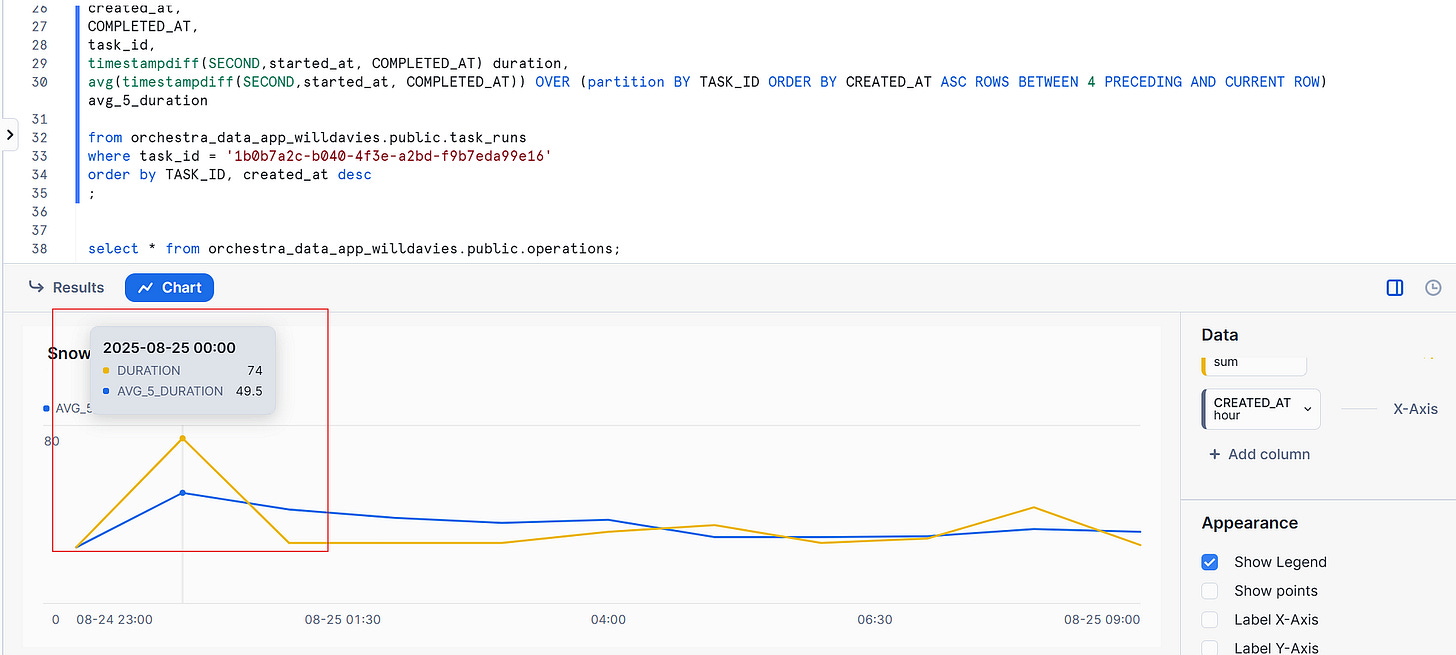

Use-Case: identify long-running task runs

Operations Table

id- Unique operation identifier (PRIMARY KEY, NOT NULL, UNIQUE)account_id- Account identifierpipeline_run_id- Associated pipeline runtask_run_id- Associated task runinserted_at- Insertion timestampmessage- Operation messageoperation_name- Name of the operationoperation_status- Operation statusoperation_type- Type of operationexternal_status- External system statusexternal_detail- External system detailsexternal_id- External system identifierintegration- Integration typeintegration_job- Integration job namestarted_at- Start timestampcompleted_at- Completion timestampdependencies- Operation dependencies (VARIANT)operation_duration- Duration in secondsrows_affected- Number of rows affectedloaded_at- When the record was loaded into Snowflake

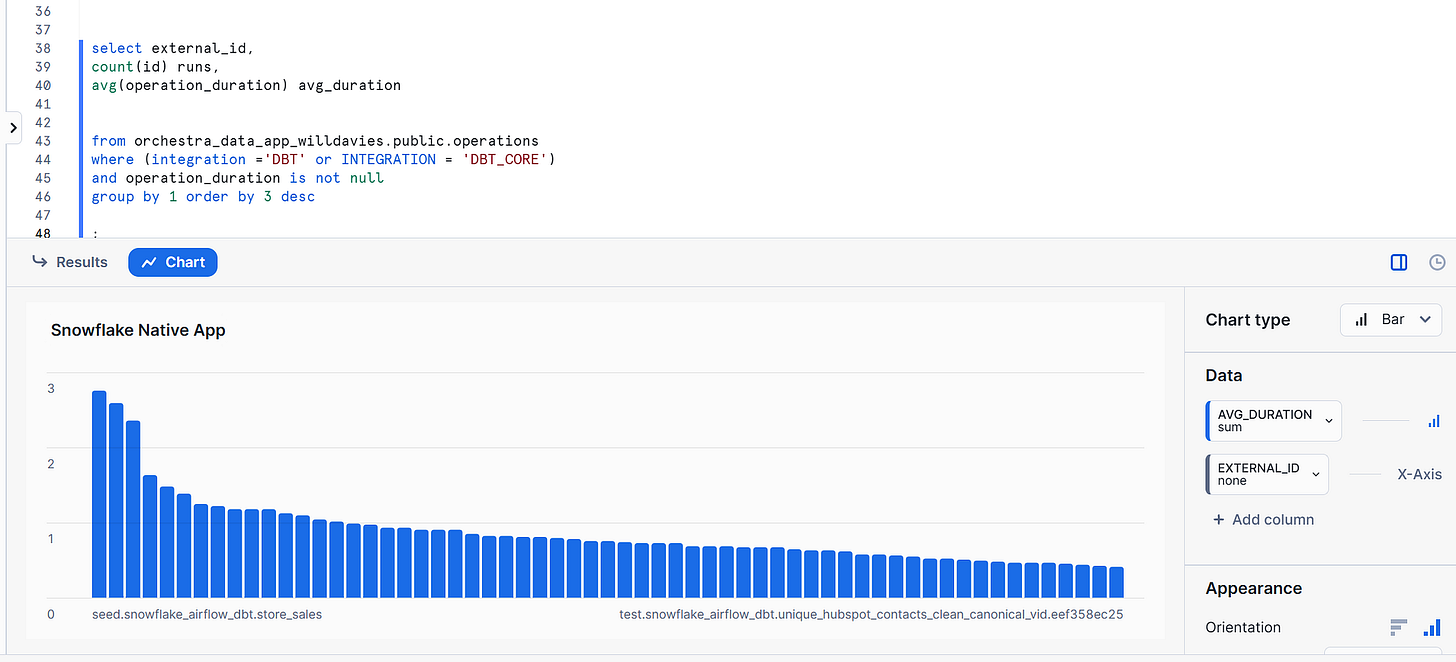

Use-case: find the distribution of cost/time spent for different dbt models and tests:

Support

For support, please contact:

Email: support@getorchestra.io

Rest API Documentation: https://docs.getorchestra.io/docs/metadata-api/overview

Find out more about Orchestra

Orchestra is a unified control plane for Data and AI Operations.

We help Data Teams spend less time maintaining infrastructure, make them proactive instead of reactive, and ultimately win trust in data and AI from the Business

We do this by consolidating Orchestration with monitoring, data quality testing, and data discovery. You don’t need an observability, lineage, catalog etc. with Orchestra.

Check out