How I use Gen AI as a Data Engineer

Generative AI is all the rage. In this article, we dive into some practical examples for Data Engineers.

About me

I’m Hugo Lu — I started my career working in M&A in London before moving to JUUL and falling into data engineering. After a brief stint back in finance, I headed up the Data function at London-based Fintech Codat. I’m now CEO at Orchestra, which is a data release pipeline tool that helps Data Teams release data into production reliably and efficiently 🚀

Also check out our Substack and our internal blog ⭐️

Introduction

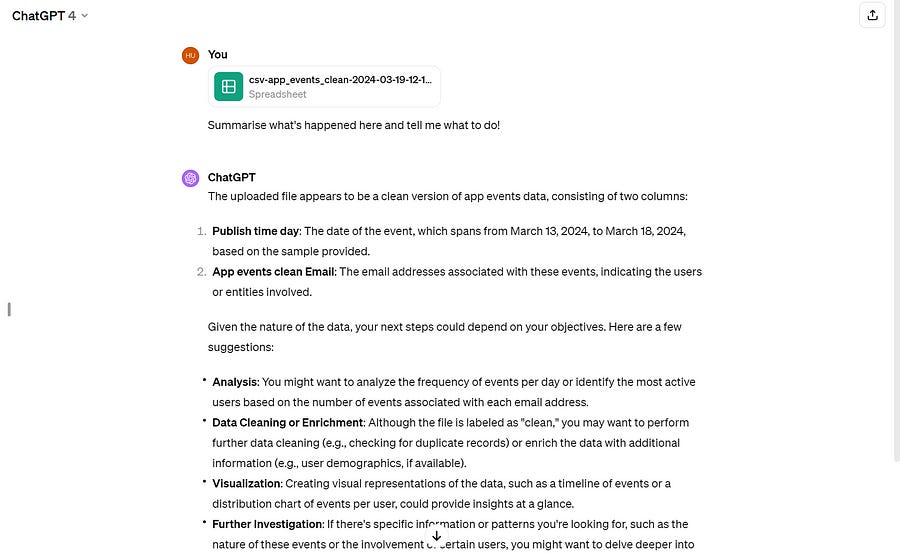

Embedding Generative AI within Data Engineering Workflows and Data Pipelines is actually extremely straightforward and gratifying.

As a bridge between software and business users, Data Teams are in an unrivaled position to quickly iterate on Generative AI use-cases that have important impacts on the business.

Specifically, Generative AI can be used to summarise vast quantities of both structured and unstructured information, which expands the breadth of data available to data teams while also increasing its depth.

However, it’s easy to get carried away with how “cool” or “trendy” Generative AI is without actually using it to drive impactful growth within an organisation. That’s why a central point of visibility for Data and AI Products is so fundamental for Data Teams.

In this article, we’ll discuss a few ways you can leverage generative AI within existing Data Pipelines and how to quantify the results.

Feature Engineering

When ingesting large quantities of unstructured data such as call notes or support tickets, data teams can now make an API call to a generative AI model to clean and summarise that data.

This could be done at the point of ingestion or mid-pipeline. For example, if you’re ingesting data from Salesforce using open-source connectors, you can iterate through a “Notes” column and make a call to OpenAI to summarise the notes. This is a form of feature engineering using Generative AI. See the very basic code sketch below.

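```python
import pandas as pd
from openai import OpenAI  # official OpenAI Python SDK (v1+)

client = OpenAI()  # reads the OPENAI_API_KEY environment variable


def fetch_data():
    # Stand-in for the ingestion step (e.g. a Salesforce connector)
    return pd.DataFrame(["some_data"], columns=["notes"])


def summarise_note(note):
    # One chat completion per note; the model name is an assumption
    response = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[{"role": "user", "content": f"Summarise these call notes:\n{note}"}],
    )
    return response.choices[0].message.content


def make_call_to_open_ai(data):
    # Iterate through the "notes" column, one API call per row
    data["completed_notes"] = data["notes"].apply(summarise_note)
    return data


data = fetch_data()
feature_engineered_data = make_call_to_open_ai(data)
```

You could also submit an array of values in a single request, using a Python script as an intermediate step within a data transformation.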

This would require you to co-ordinate Python jobs and perhaps Data Transformation jobs (queries, using dbt or Coalesce) which is hard (unless of course you have a versatile platform that handles orchestration).
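Submitting an array of values in one request, rather than one call per row, can be sketched as follows. This is a minimal sketch under assumptions: `call_llm` is a hypothetical stand-in for whatever client you use (for example the OpenAI SDK), and the prompt asks the model to reply with a JSON array of summaries in the same order. Models don’t always honour that, hence the length check before the results are written back to a table.

```python
import json
from typing import Callable, List


def build_batch_prompt(notes: List[str]) -> str:
    # Number each note so the model can keep its answers in order
    numbered = "\n".join(f"{i}. {note}" for i, note in enumerate(notes, start=1))
    return (
        "Summarise each of the following notes in one sentence. "
        "Reply only with a JSON array of strings, one per note, in the same order.\n"
        + numbered
    )


def summarise_batch(notes: List[str], call_llm: Callable[[str], str]) -> List[str]:
    # call_llm is hypothetical: it takes a prompt and returns the model's raw text
    summaries = json.loads(call_llm(build_batch_prompt(notes)))
    # Guard against the model dropping or merging items
    if not isinstance(summaries, list) or len(summaries) != len(notes):
        raise ValueError("Expected one summary per note")
    return [str(s) for s in summaries]


def fake_llm(prompt: str) -> str:
    # Stand-in model that returns a fixed answer, for local testing only
    return json.dumps(["Wants a demo", "Renewal at risk"])


print(summarise_batch(["Customer wants a demo next week", "Renewal at risk due to price"], fake_llm))
# ['Wants a demo', 'Renewal at risk']
```

One request per batch means fewer round trips, at the cost of having to validate that the response still lines up with the inputs.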

Finally, many cloud warehouses are also embedding generative AI into their products. For example, Snowflake Cortex provides SQL functions such as SNOWFLAKE.CORTEX.SUMMARIZE(), which does the work described above under the hood.
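Pushing the summarisation down into the warehouse means the pipeline only has to issue a query. A minimal sketch that builds such a query from Python, assuming a hypothetical `raw.salesforce_notes` table with a `notes` column; identifiers are validated because they cannot be passed as bind parameters. You would then run the string with your usual Snowflake client (for example `cursor.execute` in snowflake-connector-python).

```python
import re

# Unquoted identifiers; tables may be qualified as db.schema.table
_COLUMN = re.compile(r"[A-Za-z_][A-Za-z0-9_$]*")
_TABLE = re.compile(r"[A-Za-z_][A-Za-z0-9_$]*(\.[A-Za-z_][A-Za-z0-9_$]*){0,2}")


def build_summarize_query(table: str, text_column: str, alias: str = "summary") -> str:
    # Identifiers cannot be bound as query parameters, so validate them instead
    if not _TABLE.fullmatch(table):
        raise ValueError(f"Unsafe table name: {table!r}")
    for column in (text_column, alias):
        if not _COLUMN.fullmatch(column):
            raise ValueError(f"Unsafe column name: {column!r}")
    return (
        f"SELECT {text_column}, SNOWFLAKE.CORTEX.SUMMARIZE({text_column}) AS {alias} "
        f"FROM {table}"
    )


print(build_summarize_query("raw.salesforce_notes", "notes"))
# SELECT notes, SNOWFLAKE.CORTEX.SUMMARIZE(notes) AS summary FROM raw.salesforce_notes
```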

New data sources — unstructured data

If you can arrange for PDFs, documents and emails to land in an object storage layer such as S3, then you can now make use of this data.

For example, you could use a data ingestion tool to sync emails to S3 (as you might use Fivetran to get data from Salesforce to Snowflake). You can then use Snowflake’s PDF summariser tool to understand more about the data you’ve received.

Say you had a set of customer contracts stored under a file path like /contracts/region/format/name: you could pass these documents to Document AI, which can extract the key information from them automatically.
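The file path itself already carries metadata that can be joined onto whatever Document AI extracts. A minimal sketch that parses a path following the /contracts/region/format/name convention above into a record; the `ContractFile` type and the example path are hypothetical.

```python
from dataclasses import dataclass
from pathlib import PurePosixPath


@dataclass(frozen=True)
class ContractFile:
    # Hypothetical record type for path-derived contract metadata
    region: str
    file_format: str
    name: str
    path: str


def parse_contract_path(path: str) -> ContractFile:
    # Expected layout: /contracts/<region>/<format>/<name>
    parts = PurePosixPath(path).parts  # e.g. ('/', 'contracts', 'emea', 'pdf', 'x.pdf')
    if len(parts) != 5 or parts[:2] != ("/", "contracts"):
        raise ValueError(f"Path does not match /contracts/region/format/name: {path}")
    _, _, region, file_format, name = parts
    return ContractFile(region=region, file_format=file_format, name=name, path=path)


print(parse_contract_path("/contracts/emea/pdf/acme_order_form.pdf"))
```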

This would be extremely powerful for the analysis of customer contracts and order forms. You could easily infer a schema like:

```json
{
  "contract_type": "annual",
  "products": ["Platform", "Dashboard"],
  "platform_fees": ["$10,000", "$20,000"],
  ...
}
```

This removes the need for complicated integrations between operational tools like DocuSign and Salesforce. Better still, it can eliminate hundreds of hours of manual work for the finance team, which is an instant, quantifiable win (“This saved me 10 hours a week = $500 per week = $25,000 per year”, assuming roughly 50 working weeks).
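Whatever does the extraction, it helps to validate its output before it lands in a table. A minimal sketch, assuming the extractor returns JSON shaped like the schema above and that `products` and `platform_fees` are parallel lists (one fee per product). The `Contract` type and the parsing rules are illustrative, not Document AI’s actual output format.

```python
import json
from dataclasses import dataclass
from decimal import Decimal
from typing import Dict


@dataclass
class Contract:
    # Hypothetical typed record for one extracted contract
    contract_type: str
    fees_by_product: Dict[str, Decimal]


def parse_fee(fee: str) -> Decimal:
    # "$10,000" -> Decimal("10000"); Decimal avoids float rounding on money
    return Decimal(fee.replace("$", "").replace(",", "").strip())


def parse_contract(raw_json: str) -> Contract:
    payload = json.loads(raw_json)
    products, fees = payload["products"], payload["platform_fees"]
    # Assumes one fee per product, in the same order
    if len(products) != len(fees):
        raise ValueError("products and platform_fees have different lengths")
    return Contract(
        contract_type=payload["contract_type"],
        fees_by_product={p: parse_fee(f) for p, f in zip(products, fees)},
    )


example = '{"contract_type": "annual", "products": ["Platform", "Dashboard"], "platform_fees": ["$10,000", "$20,000"]}'
contract = parse_contract(example)
print(contract.fees_by_product)  # {'Platform': Decimal('10000'), 'Dashboard': Decimal('20000')}
print(sum(contract.fees_by_product.values()))  # 30000
```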

Because these are net new sources of data, logically separate from other data pipelines, their impact is easy to quantify. That separation, and therefore the monitoring, is much harder to achieve with a monolithic approach built on an open-source workflow orchestration tool.

Webscraping

Teams can use platforms like Orchestra and Nimble to specify the internet as a data source. Yes, the internet. This is because generative AI is extremely good at extracting the important information from web pages. By contrast, the classic scraping toolkit is around two decades old: Selenium and Beautiful Soup both date back to the mid-2000s.

Imagine if you could turn LinkedIn into a data source. Imagine if you could monitor, in real time, how often different search terms are mentioned, without having to pay for SemRush and check it constantly.

This is what Webscraping and AI can do.
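The monitoring idea above can be sketched for the counting step without any model: strip a fetched page down to its visible text, then count how often each tracked term appears. A minimal sketch using only the standard library; the page HTML and term list are hypothetical, and in practice the cleaned text is also what you would hand to an LLM for summarisation.

```python
import re
from html.parser import HTMLParser
from typing import Dict, Iterable, List


class VisibleTextParser(HTMLParser):
    """Collects text content, skipping <script> and <style> blocks."""

    def __init__(self) -> None:
        super().__init__()
        self.chunks: List[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def visible_text(html: str) -> str:
    parser = VisibleTextParser()
    parser.feed(html)
    parser.close()
    # Collapse runs of whitespace into single spaces
    return " ".join(" ".join(parser.chunks).split())


def count_terms(text: str, terms: Iterable[str]) -> Dict[str, int]:
    # Case-insensitive, whole-word matches for each tracked term
    lowered = text.lower()
    return {t: len(re.findall(rf"\b{re.escape(t.lower())}\b", lowered)) for t in terms}


page = "<html><style>p{}</style><body><p>Data orchestration beats cron.</p><p>Orchestration FTW</p></body></html>"
print(count_terms(visible_text(page), ["orchestration", "cron", "airflow"]))
# {'orchestration': 2, 'cron': 1, 'airflow': 0}
```

Running this on a schedule and storing the counts gives you a time series of mentions per term.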

It’s also worth noting that webscraping jobs are typically low-compute but long-running, so it’s ill-advised to run them on an expensive cluster, such as one you might use to deploy an open-source workflow orchestration tool: you would be paying for capacity you don’t use. A good solution is to use an orchestration layer and run webscraping scripts on a cluster where you can pre-provision nodes, such as EC2 instances.
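One reason a small, pre-provisioned node suffices is that scraping is mostly waiting on the network, so one process can keep many requests in flight. A minimal sketch with `asyncio` and a semaphore capping concurrency; `fake_fetch` is a hypothetical stand-in for a real async HTTP client, and the limit of 5 is an arbitrary assumption.

```python
import asyncio
from typing import Awaitable, Callable, Dict, List


async def scrape_all(
    urls: List[str],
    fetch: Callable[[str], Awaitable[str]],
    max_concurrency: int = 5,
) -> Dict[str, str]:
    # Cap in-flight requests so we stay polite to target sites
    semaphore = asyncio.Semaphore(max_concurrency)

    async def fetch_one(url: str) -> str:
        async with semaphore:
            return await fetch(url)

    # gather preserves input order, so zip pairs each URL with its page
    pages = await asyncio.gather(*(fetch_one(u) for u in urls))
    return dict(zip(urls, pages))


async def fake_fetch(url: str) -> str:
    # Hypothetical fetcher: simulates a slow, network-bound request using almost no CPU
    await asyncio.sleep(0.05)
    return f"<html>{url}</html>"


urls = [f"https://example.com/page/{i}" for i in range(20)]
results = asyncio.run(scrape_all(urls, fake_fetch))
print(len(results))  # 20
```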

As these represent new data sources, monitoring metrics like cost and usage is straightforward, since each of these Data Products can be isolated in its own data pipeline or standalone workflow.
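Because each Data Product runs as its own pipeline, cost and usage can be rolled up per product from run metadata. A minimal sketch, assuming each run is logged with a product name, a token count and a price per 1,000 tokens; the `RunRecord` fields, product names and prices are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class RunRecord:
    product: str         # hypothetical Data Product name
    tokens: int          # tokens consumed by the run
    price_per_1k: float  # assumed model price per 1,000 tokens


def rollup(runs: List[RunRecord]) -> Dict[str, Dict[str, float]]:
    # Aggregate run count, tokens and estimated cost per Data Product
    totals: Dict[str, Dict[str, float]] = defaultdict(
        lambda: {"runs": 0, "tokens": 0, "cost": 0.0}
    )
    for run in runs:
        t = totals[run.product]
        t["runs"] += 1
        t["tokens"] += run.tokens
        t["cost"] += run.tokens / 1000 * run.price_per_1k
    return dict(totals)


runs = [
    RunRecord("contract_extraction", 12_000, 0.5),
    RunRecord("contract_extraction", 8_000, 0.5),
    RunRecord("web_scraping", 30_000, 0.25),
]
print(rollup(runs))
```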

Optimising Business Processes

The ability to summarise not only notes but also structured data sources opens up a whole range of operational data pipelines.

For example, you could take a list of recent activities and use these as the over-arching prompt for an AI Agent. You could then iterate through account executives, business development representatives or another functional role and use the AI to summarise a list of things they should be doing (with canonical links to the relevant resources).
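Iterating through reps can be sketched as grouping recent activities by owner and building one prompt per person. A minimal sketch; the `Activity` fields, the prompt wording and the example URLs are hypothetical, and the resulting prompts would be sent to whichever model or agent you use.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Activity:
    owner: str    # account executive or BDR who owns the activity
    account: str
    summary: str
    link: str     # hypothetical canonical link to the CRM record


def build_rep_prompts(activities: List[Activity]) -> Dict[str, str]:
    # Group activities by owner so each rep gets their own prompt
    by_owner: Dict[str, List[Activity]] = defaultdict(list)
    for activity in activities:
        by_owner[activity.owner].append(activity)

    prompts = {}
    for owner, items in by_owner.items():
        lines = "\n".join(f"- {a.account}: {a.summary} ({a.link})" for a in items)
        prompts[owner] = (
            f"You are helping {owner} prioritise their week. "
            "Based on the recent activities below, list the top actions they should take, "
            "citing the relevant link for each.\n" + lines
        )
    return prompts


activities = [
    Activity("alice", "Acme", "Asked for pricing", "https://crm.example.com/acme"),
    Activity("bob", "Globex", "Trial ends Friday", "https://crm.example.com/globex"),
    Activity("alice", "Initech", "No reply in 14 days", "https://crm.example.com/initech"),
]
print(build_rep_prompts(activities)["alice"])
```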

Distilling sales priorities from vast quantities of structured and unstructured data is inherently tricky. LLMs are adept at analysing unstructured data and can dramatically shorten time to insight, and the Data Team is in a prime position to do this.

Furthermore, it is relatively straightforward to monitor. Because the LLM is called with an array of values, Data Teams running these batch inference jobs for operational purposes can track usage of the service over time.
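Batch inference with usage tracking can be sketched as chunking the input array, calling the model once per chunk, and logging one usage record per call that can later be aggregated over time. A minimal sketch; `call_model` is a hypothetical callable returning one output per input, and the in-memory list stands in for whatever metadata store you use.

```python
from collections import Counter
from datetime import date, datetime, timezone
from typing import Callable, Dict, List, Sequence


def run_batches(
    values: Sequence[str],
    call_model: Callable[[List[str]], List[str]],
    usage_log: List[dict],
    batch_size: int = 50,
) -> List[str]:
    outputs: List[str] = []
    for start in range(0, len(values), batch_size):
        batch = list(values[start:start + batch_size])
        result = call_model(batch)
        if len(result) != len(batch):
            raise ValueError("Model returned a different number of outputs than inputs")
        outputs.extend(result)
        # One usage record per model call
        usage_log.append({"at": datetime.now(timezone.utc), "items": len(batch)})
    return outputs


def items_per_day(usage_log: List[dict]) -> Dict[date, int]:
    # Aggregate processed items by UTC day for a simple usage time series
    counts: Counter = Counter()
    for record in usage_log:
        counts[record["at"].date()] += record["items"]
    return dict(counts)


log: List[dict] = []
results = run_batches([f"account {i}" for i in range(120)], lambda b: [v.upper() for v in b], log)
print(len(results), len(log))  # 120 3
print(items_per_day(log))
```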

Generative AI initiatives are frequently met with skepticism, so being able to demonstrate usage of the “Data Product” is crucial. A layer that gathers metadata and surfaces it to non-technical business stakeholders will be important.

Summary

In this short article we covered four ways Data Teams can quickly test Data and AI Products in their organisation:

1. Feature Engineering

2. Unstructured data

3. Webscraping

4. Optimising Business Processes

The extent to which these will be successful will depend heavily on existing processes in an organisation.

If there aren’t existing relationships between business stakeholders and the Data Team, unleashing Generative AI on an organisation is unlikely to work. Data Teams need to be internal advocates and champions, marketing themselves to the rest of the business the way a start-up markets itself to the world.

Some use-cases, such as feature engineering, are likely to have a very small impact. An additional column in a table that gives a clear and concise summary of all the other columns is unlikely to “move the needle” in any regard.

Use-cases (2) and (3) are likely to be incredibly impactful, but only where relationships with stakeholders already exist. If Finance Teams are happy keying contract values into an ERP system, they are unlikely to react well to a suggestion that this work be automated for them. A CFO or Financial Controller may think differently.

Fundamental to all of this is a bridge between the Data Team and the C-Suite. Data and AI initiatives represent an investment of time which could be spent elsewhere.

Data Teams should ensure that they are using a platform that can aggregate metadata from their Data and AI products such as cost, usage, and performance to facilitate a discussion with C-level executives where Data Teams can showcase the Business Value of their Data and AI Products.

Hope you enjoyed this article! If you’ve got any bright ideas about how else Data Teams can leverage Generative AI, let us know in the comments! 💡

Find out more about Orchestra

Orchestra is a platform for getting as much value out of your data as humanly possible. It’s also a feature-rich orchestration tool, and can solve for multiple use-cases and solutions. Our docs are here, but why not also check out our integrations? We manage these so you can get started with your pipelines instantly. We also have a blog, written by the Orchestra team + guest writers, and some whitepapers for more in-depth reads.