Should your Orchestration Tool be able to run Python?

Probably

Substack Note

We often have folks asking us where we see Orchestra headed. It’s a fair question, I suppose, given that every data company is having to expand to survive.

One area companies seem to expand into is data ingestion. Having built connectors in the past, I am adamant that Orchestra will not become a data ingestion company: the code is very boring to write, and there are simply too many connectors.

That said, given that every workflow orchestrator today lets you execute Python, a question we often receive is why Orchestra “can’t just run my Python”.

The guiding principle of Orchestra is a modular architecture. You should have Python running where it runs best (an EC2 instance, for example), and we’ll trigger and monitor it, rather than saying “yeah, I want to run all your code”.
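The trigger-and-monitor split can be sketched in a few lines. This is a minimal, hypothetical illustration rather than Orchestra's implementation: `trigger` and `get_status` stand in for whatever API starts and inspects the remote job, and the status names are assumptions. The orchestrator only ever holds a job ID and a status string, while the heavy lifting happens elsewhere.

```python
import time
from typing import Callable

# Hypothetical terminal states a remote job can end in.
TERMINAL_STATES = {"SUCCEEDED", "FAILED"}


def trigger_and_monitor(
    trigger: Callable[[], str],
    get_status: Callable[[str], str],
    poll_seconds: float = 5.0,
    timeout_seconds: float = 3600.0,
) -> str:
    """Start a remote job, then poll its status until it finishes or times out.

    The compute (e.g. an EC2 instance) lives wherever `trigger` starts it;
    this function only tracks the job ID and its status.
    """
    job_id = trigger()
    deadline = time.monotonic() + timeout_seconds
    while True:
        status = get_status(job_id)
        if status in TERMINAL_STATES:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"job {job_id} still {status} after {timeout_seconds}s")
        time.sleep(poll_seconds)
```

Because the function returns "FAILED" instead of raising, the caller decides how a failed job affects downstream work, which keeps the remote job and the monitoring logic independent.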

So why does Orchestra now support Python? Well, mainly because it just makes things more convenient. What if you want to use Python to run a little script that just checks something? What if running Python in AWS requires you to spend four weeks bothering another platform team? What if the data ingestion is actually very simple and lightweight?
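To make "a little script that just checks something" concrete, here is a hypothetical freshness check that fails when a file has not been updated recently. It assumes, as with most orchestrators, that a non-zero exit code marks the Task as failed; the function names and thresholds are illustrative only.

```python
import os
import sys
import time


def file_is_fresh(path: str, max_age_hours: float) -> bool:
    """Return True if `path` exists and was modified within the last `max_age_hours`."""
    if not os.path.exists(path):
        return False
    age_seconds = time.time() - os.path.getmtime(path)
    return age_seconds <= max_age_hours * 3600


def check_freshness(path: str, max_age_hours: float) -> int:
    """Print a one-line result and return a process exit code (0 = pass, 1 = fail).

    Assumption: the orchestrator treats a non-zero exit code as a failed Task.
    """
    if file_is_fresh(path, max_age_hours):
        print(f"OK: {path} was updated in the last {max_age_hours}h")
        return 0
    print(f"STALE: {path} is missing or older than {max_age_hours}h", file=sys.stderr)
    return 1
```

Ending the script with something like `sys.exit(check_freshness("exports/daily.csv", 24))` (a hypothetical path) would turn a stale file into a visible Task failure.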

Which leads me to my conclusion: “probably” yes, your orchestrator should be able to execute some arbitrary code. Not for moving data en masse, but for utility scripts that conveniently live in the same place as your orchestration code. In the purist sense I would say “no”, because an orchestrator / metadata framework should be really stupid, but pragmatically this is a feature pretty much everyone I speak to has asked for!

That said, I still believe moving data en masse should be done using a standardised framework or tool to get the benefits of scale. This enables a modular architecture, which is more robust because data ingestion and orchestration/monitoring are independent of each other.

If you’re running a Data Team, think very carefully about where you are going to run your data ingestion. Data ingestion / movement can be slow and expensive, so you normally want it to run independently, in a scalable environment, and probably at least partly on your own infrastructure to minimise costs.

Anyway, if you have some python code lying around, we’d love to get your feedback on the integration. You can try it out here.

Introduction

Today we are extremely excited to announce that Orchestra supports lightweight Python execution.

One of the challenges data engineers and analysts face is managing the infrastructure required to reliably and efficiently execute Python-based workloads in the cloud. Even once these workloads are deployed, gaining visibility into them and orchestrating them is harder still.

Orchestra supports:

Virtual machines (Azure, AWS EC2)

Container services (AWS ECS, Google Cloud Run)

Serverless functions (AWS Lambda, Azure Functions, Google Cloud Functions)

Utility frameworks (GitHub Actions, Snowpark, Databricks notebooks)

Arbitrarily located Python via the Python SDK. Read more about our integrations here.

🎊 For lightweight python execution, maintaining any of the above is no longer necessary for data teams using Orchestra 🎊

Users can simply connect Orchestra to a Git repository and run Python-based workloads on demand. The benefits of Orchestra’s Python integration include:

scales horizontally

support for both parameters and secrets

full logging and visibility

daisy-chaining of Python Tasks with other Tasks, such as ELT jobs using Fivetran, Airbyte, Talend and so on, or transformations using Coalesce, dbt Cloud or dbt-core (also from within Orchestra).
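Daisy-chaining boils down to running Tasks in dependency order. The sketch below uses the standard-library `graphlib.TopologicalSorter` (Python 3.9+) to order a hypothetical pipeline. The Task names mirror the tools above, but the callables are stand-ins, and this is not a description of Orchestra's scheduler.

```python
from graphlib import TopologicalSorter
from typing import Callable

# Hypothetical pipeline: each Task name maps to the set of Tasks it depends on.
PIPELINE: dict[str, set[str]] = {
    "fivetran_sync": set(),
    "python_ingest": set(),
    "dbt_build": {"fivetran_sync", "python_ingest"},
    "python_alert": {"dbt_build"},
}


def run_pipeline(deps: dict[str, set[str]], tasks: dict[str, Callable[[], None]]) -> list[str]:
    """Run every Task after all of its dependencies and return the order used.

    Simplification: Tasks run one at a time, and an exception raised by any
    Task aborts all remaining Tasks.
    """
    order = list(TopologicalSorter(deps).static_order())
    for name in order:
        tasks[name]()
    return order
```

For simplicity it runs Tasks sequentially; an orchestrator would typically run independent Tasks such as `fivetran_sync` and `python_ingest` in parallel.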

We hope this will significantly lower the DevOps skills needed to use Python in end-to-end ELT workflows, while retaining guardrails and best practices via Git.

The proliferation of data, both in the cloud and on-premises, means using a single ELT framework is rarely possible in practice. This creates the need for connective tissue between the components of the stack.

Orchestra is that connective tissue. With native Python support in Orchestra, data teams can pick and choose the best tool for each job, like using Fivetran for SaaS ingestion, ADF for database replication, and Python for hard-to-reach, lightweight integrations.

Conclusion

At Orchestra our mission is to help Data Teams be successful by spending time driving Data and AI adoption instead of maintaining boilerplate infrastructure.

A piece of feedback we have consistently received is that Python is often a required component for different tasks. Not just for moving data, but for sending notifications, running little clean-up tasks, and generally as an all-round Swiss Army knife.

By supporting lightweight Python execution, Orchestra completes its first functional iteration: it now supports dbt-core and Python, as well as adapters for over 100 integrations with commonly used data and cloud infrastructure.

Learn more below

📗Read the docs

Use-cases for running Python in Orchestra

Save on ingestion costs by moving ELT from hosted services to a self-built Python framework using dlt.

Easily alert stakeholders and send custom alerts when data quality tests succeed.
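As a sketch of the alerting use case, the snippet below builds a POST request for a Slack incoming webhook, which accepts a JSON body with a `text` field. Slack is an assumption (the post does not name a channel), and the function names, test name and message format are illustrative. In practice the webhook URL would be stored as a secret rather than in Git.

```python
import json
import urllib.request


def build_alert_request(
    webhook_url: str, test_name: str, passed: bool, details: str = ""
) -> urllib.request.Request:
    """Build (but do not send) a POST request for a Slack-style incoming webhook.

    Assumption: the receiving webhook accepts a JSON body of the form {"text": ...}.
    """
    status = "passed" if passed else "FAILED"
    text = f"Data quality test `{test_name}` {status}."
    if details:
        text += f" {details}"
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_alert(request: urllib.request.Request) -> int:
    """Send the request and return the HTTP status code."""
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status
```

Keeping request construction separate from sending makes the message easy to test without touching the network.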

Tutorial: getting set up in Orchestra with Python

Step 1: set up a repository with your Python code

Make sure it is set up with CI/CD and any additional environments your organisation requires.

Step 2: provision a personal access token with read access to contents

Add any additional permissions you might want:

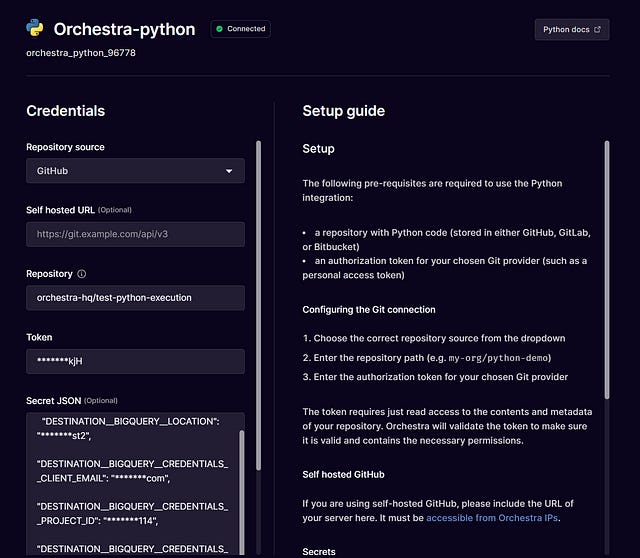
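Before pasting the token into Orchestra, it can be worth checking that it really can read your repository. The sketch below assumes the repository is hosted on GitHub and uses the GitHub REST "repository contents" endpoint; the owner, repo and file path are placeholders you would replace.

```python
import urllib.error
import urllib.request

GITHUB_API = "https://api.github.com"


def build_contents_request(owner: str, repo: str, path: str, token: str) -> urllib.request.Request:
    """Build a GET request for one file via GitHub's repository contents endpoint.

    Assumption: the repository lives on GitHub (not GitLab, Bitbucket, etc.).
    """
    url = f"{GITHUB_API}/repos/{owner}/{repo}/contents/{path}"
    return urllib.request.Request(
        url,
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "X-GitHub-Api-Version": "2022-11-28",
        },
    )


def token_can_read(owner: str, repo: str, path: str, token: str) -> bool:
    """Return True if the token can read `path`; HTTP errors such as 401/403/404 mean it cannot."""
    request = build_contents_request(owner, repo, path, token)
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status == 200
    except urllib.error.HTTPError:
        return False
```

GitHub returns 404 rather than 403 for private repositories the token cannot see, so a `False` here can mean either a wrong path or missing permissions.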

Step 3: create the integration in Orchestra

Try it here -> integrations -> connection

Step 4: Create an end-to-end pipeline

Head over to the “pipelines” page and create a new pipeline with a Python Task.

The classic pattern here is to run pip install -r requirements.txt in the “Build command” field and then run your Python scripts in the “Command” field, e.g. python ingest_data.py (or python -m ingest_data, since -m expects a module name without the .py extension).
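A minimal sketch of what ingest_data.py might contain. It assumes parameters and secrets reach the script as environment variables; SOURCE_URL, API_TOKEN and OUTPUT_PATH are hypothetical names, and the source endpoint is assumed to return a JSON array of records. It uses only the standard library, so requirements.txt could stay empty for this particular script.

```python
"""ingest_data.py: a hypothetical lightweight ingestion script.

Assumption: parameters and secrets are exposed as environment variables.
SOURCE_URL, API_TOKEN and OUTPUT_PATH are illustrative names only.
"""
import json
import os
import urllib.request


def fetch_records(url: str, token: str) -> list[dict]:
    """GET a JSON array of records from a (hypothetical) REST endpoint."""
    request = urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


def write_jsonl(records: list[dict], path: str) -> int:
    """Write one JSON object per line and return the number of rows written."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record) + "\n")
    return len(records)


def main() -> None:
    url = os.environ["SOURCE_URL"]    # parameter (hypothetical name)
    token = os.environ["API_TOKEN"]   # secret: keep it out of Git
    output_path = os.environ.get("OUTPUT_PATH", "records.jsonl")
    count = write_jsonl(fetch_records(url, token), output_path)
    # stdout is typically captured in the Task's run logs
    print(f"wrote {count} records to {output_path}")
```

Ending the file with the usual `if __name__ == "__main__": main()` guard lets the Command field run it as python ingest_data.py.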

Step 5: add additional Tasks

One of the main benefits of Orchestra is that you can use it to run Python alongside other Tasks.

This flow allows you to monitor, run and debug failed runs from a single place.

And that’s it! Just like that you have Python running powerfully and scalably in Orchestra.

Find out more about Orchestra

Orchestra is a unified control plane for Data and AI Operations.

We help Data Teams spend less time maintaining infrastructure, make them proactive instead of reactive, and ultimately win trust in data and AI from the Business.

We do this by consolidating Orchestration with monitoring, data quality testing, and data discovery. With Orchestra, you don’t need a separate observability, lineage, or catalog tool.
