Simple, Modern, and Modular: Data Stacks for scrappy Businesses

How to use ETL/ELT tools, Snowflake, dbt Cloud and Orchestra to rapidly deliver business value

About me

I’m Hugo Lu — I started my career working in M&A in London before moving to JUUL and falling into data engineering. After a brief stint back in finance, I headed up the Data function at London-based Fintech Codat. I’m now CEO at Orchestra, which is a data release pipeline tool that helps Data Teams release data into production reliably and efficiently 🚀

Check out our Substack and our internal blog ⭐️

Introduction

I wrote an article series a while back called “The perfect data pipeline doesn’t exist” where we dived into the ideal pipelines using the MDS, Databricks, Azure and others.

The motivation was simple: assume organisations are constrained by their cloud provider / main data software provider and work out what makes sense from there.

A new trend we see in data is that people build around core pieces of software like Databricks or Snowflake, rather than around their cloud provider. Storage layers tend to be the biggest determinants. Therefore, I’ll now be intermittently talking about some “template data stacks” that work well, depending on your profile.

In this article, we discuss a simple, modern and modular data stack designed to rapidly and reliably drive business value that will be highly applicable to a large proportion of tech and non-tech businesses.

About you, your organisation, and your data

You are:

In charge of architectural decisions at your company

Focused on driving business value through possibly transformational and cultural change

Willing to focus on a small set of initiatives that deliver the most business value

Your organisation is:

Focused on delivering results

not blessed with an infinite budget

Up to 1,500 employees

A mix of technical and non-technical data folk

Your data is:

Not too big i.e. millions not billions of rows

Not operating in a highly regulated industry i.e. subject to significant PII constraints and strict data governance policies

Not too fast — data is not being used to support sub second latency use-cases

Varied. You have streams, multiple use-cases, multiple sources, multiple stakeholders, multiple legacy technologies and so-on

Ok let’s get to it.

The solution — Snowflake and the rest follows

In order to ingest data from a wide variety of sources at different speeds across multiple stakeholders, it’s essential not to embed yourself into a single tool. Fivetran, for example, moves data well for API SaaS connectors under 1m rows, but it can get expensive after that. Airbyte is a great alternative for SaaS connectors and database replication, but might not be your best choice for highly custom or streaming use-cases. Consolidated Stream engines like Estuary are powerful, but are relatively early and can wed you to architectural decisions (e.g. RedPanda = Kafka).

Recommendations

Streaming / high volume / low latency

“Do it yourself” in your chosen cloud e.g. Azure Service Bus, bigQuery pubsub, AWS Kinesis

Database replication / CDC

Airbyte or “Do it yourself” (e.g. you can do AWS Dynamo to S3 using Kinesis too)

For on prem, it could be wise to tender some SAAS e.g. use Striim for Oracle or SQL Server

SAAS connectors / low volume / speed not required

Airbyte or Fivetran. Use Portable for long-tail. Buy connectors all day long — they’re commoditised and therefore CHEAP

For long-tail, build your own and host in ECS, EC2, EKS, GKS. This has the added bonus of giving your engineering team the infrastructure they need to execute arbitrary commands, should they need to

Transformations

If speed is of the essence and you are on Snowflake, then Coalesce.io is the only real choice

If your team want to write lots of code, take dbt core + Cloud. Best option if you’re not on Snowflake by a mile

Storage / Warehouse

BigQuery or Snowflake. These are the most popular and well-covered platforms. You will find engineers with good familiarity of these platforms where you might not with others. They are also highly interoperable with other tools

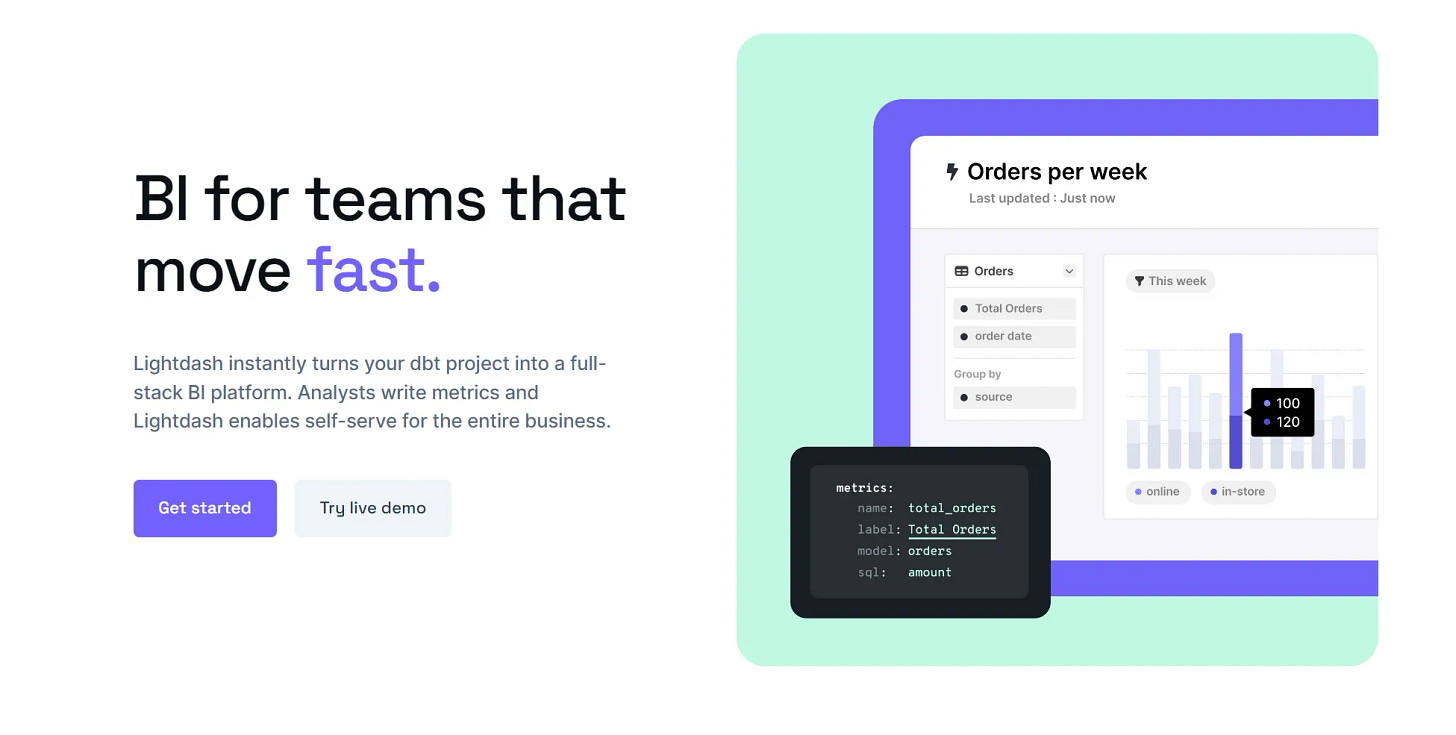

BI

There are a whole host of vendors, so the key here is just choosing one that fits with your warehouse. Lightdash is a great option.

Please don’t spend more than $20k a year on this!

Orchestration and Observability (this is a plug)

Use Orchestra. This is by far the quickest, most effective and economical option with the stack used above

With Orchestra, anyone in the data team can build / access-control view data pipelines end to end (even if the data eng team who maintain streams and connectors are separate to an analytics team who write SQL or use Coalesce)

Orchestra is highly interoperable with data tools, which means adding a new component of your stack does not require engineering time spent writing code

Orchestra has full operational lineage and observability, which gives teams early access to suites of information traditionally gated by enterprise-focussed and expensive observability-only platforms

Environment management (particularly in conjunction with Snowflake) is handled by Orchestra so you get the rigorous dataops without having to maintain multiple airflow instances across yet more elastic infrastructure

Summary

With these pieces of tooling in place, any data team can reliably and efficiently move and transform data. With the above stack, you can feasibly:

Ingest batch data at a cadence

Support streaming use-cases (streams into S3 + Snowpipe + Dynamic Tables or Snowflake Streams)

Easily build transformations

Have BI, even as code

Not spend more than $50k a year

In this example, I’d expect the costs to breakout as follows in a year:

Fivetran / Portable a few hundred $ a month, if not free

Airbyte ^ same as above

Streams yourself: Maybe $500 a month

BI: $5k upwards for managed, depends on users, free if you self-host lightdash

dbt: $1.2k; you only need one developer seat

Snowflake: $20-$30k depending on use-case

Orchestra: see pricing here

Total $37.2k — $47.2k depending on usage and if you’re doing streams

With the added advantage that you don’t really need a huge data team to make this work. Setting up streams yourself can be done by a software engineer, and Snowflake etc. requires a cool head and a bit of SQL knowledge but that’s really about it. Everything above is also about 50% the cost of a decent data engineer, so it’s vastly cheaper and faster than hiring someone to build everything open-source.

The final point to note is — this is a run-rate cost, which is the cost of your data operation at its most mature when it’s absolutely flying. If you focus on delivering the most promising initiatives end-to-end, and doing this successfully, then you’ll be spending far less than this and creating far more value in the short-term. Using a platform like Orchestra is a huge advantage here, since it’s very easy to convey the value of a data product due to the helpful metadata collected (cost, usage, etc) — I imagine convincing your CEO the data team has value by showing them the Airflow UI is probably impossible.

I hope you enjoyed this guide. Please don’t take any of this as gospel, remember articles on medium are opinions and at the end of the day, we’re just discussing data tooling. The above mentioned costs will of course vary dramatically based on individual circumstance but what’s certain is the setup time with this is very very quick, and done right, you won’t break the bank 💰