Software Engineering Approaches for Data Engineers: the biggest mistakes early data teams make

Why you need Orchestration at a minimum from day 1

I often get asked if small or early data teams really need orchestration.

I think this question is a good candidate for the software engineering to data engineering analogy.

When you develop software, the standard of the product or the code you ship needs to be extremely high. This is because if software breaks, you get angry customers and breached SLAs, which have material revenue impacts.

As a result, software code gets tested in different environments before it makes its way to production. Code gets built, checks are run, more code gets built, and checks are run.

It’s not the case that checks run first, and then new code gets built. That doesn’t make any sense! Why would you test stuff before implementing the changes? It is so illogical it’s almost impossible to explain.

The point is that software releases are orchestrated, monitored, and happen across different environments. This makes the end-to-end process incredibly robust.

Ok, now let’s imagine what most people do in Data.

Fivetran or ingestion scripts pulling data directly into a production database, with only one version of the data

dbt running on top for transformations

No orchestration or monitoring apart from whatever is native to each tool

You can see pretty easily why this is, quite frankly, an amateur set-up. If there are data quality issues or a process breaks, you’re still going to run dbt or your transformation tool (e.g. Coalesce), and you’re going to create all that new data anyway. The data could be wrong, it could be missing, and you have no fucking clue.

And the best bit is that neither will your stakeholders. Except they will, because they’ll be looking at a dashboard with an empty bar for the latest day and thinking “Damn, that new Head of Data has ballsed up again.”

With some basic orchestration, you can replicate the reliability of software release cycles and avoid this situation.
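As a minimal sketch of what “basic orchestration” adds to the Fivetran-plus-dbt setup above, the Python below runs steps in order and refuses to run the transformation step if a freshness check on the newly loaded data fails, which is exactly the “empty bar for the latest day” scenario. The step names, the `check_freshness` helper and the one-day threshold are hypothetical placeholders, not Orchestra’s API; in a real pipeline the check would query your warehouse and the transform step would trigger dbt or Coalesce.

```python
from datetime import date, timedelta
from typing import Callable


class CheckFailed(Exception):
    """Raised when a data check fails, halting the rest of the pipeline."""


def check_freshness(latest_loaded_date: date, today: date, max_lag_days: int = 1) -> None:
    # Hypothetical check: fail if the newest loaded partition is older than allowed.
    lag = (today - latest_loaded_date).days
    if lag > max_lag_days:
        raise CheckFailed(f"latest data is {lag} days old (max {max_lag_days})")


def run_pipeline(steps: list[tuple[str, Callable[[], None]]]) -> list[str]:
    """Run steps in order; a failed check stops the run so downstream steps never execute."""
    completed: list[str] = []
    for name, step in steps:
        try:
            step()
        except CheckFailed as exc:
            print(f"{name} failed: {exc}; skipping downstream steps")
            break
        completed.append(name)
    return completed


if __name__ == "__main__":
    today = date(2024, 5, 2)
    stale_load = today - timedelta(days=3)  # e.g. an ingestion job silently stopped
    steps = [
        ("ingest", lambda: None),  # placeholder for triggering Fivetran / ingestion scripts
        ("check_freshness", lambda: check_freshness(stale_load, today)),
        ("transform", lambda: None),  # placeholder for triggering dbt
    ]
    print(run_pipeline(steps))  # the transform step never runs on stale data
```

The design choice mirrors a software release: checks sit between “build” (ingestion) and “ship” (transformations that feed dashboards), so bad data stops the run instead of silently flowing through.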

So yes, there are 7 reasons why Orchestra is perfect for small teams, but the real takeaway from this article is that if you think Orchestration is dispensable, you are wrong. Orchestration is not some nebulous pseudo-category like “Data Observability” or “Data Quality Monitoring”: Orchestration is the data equivalent of GitHub Actions. If you ask any software engineer “Can you do your job without GitHub Actions?” they will laugh in your face.

So don’t be a dummy. Don’t be put off by the fact that Airflow is a massive investment for a single-person data team, or even a growing one. Just start by using Orchestra, and move away later if you don’t like it.

Introduction

At Orchestra, we work with Data Teams large and small — anywhere from massive enterprises to single-person data teams in tech start-ups.

Part of our mission is to make data engineers more productive by reducing the amount of time spent on menial tasks like writing data quality tests, manually rerunning pipelines, debugging failures and of course — UI.

Wanting to avoid these problems is a common reason why Data Teams that are just starting out want full orchestration and observability (“all the bells and whistles”) from day 1, only to find it too expensive.

The result is a painful start for an early-stage data team. In addition to building the platform and serving stakeholders, the team has to deal with fragile pipelines and painful debugging. This erodes trust from the outset and brings the dreaded “The numbers don’t look right. Can you check?” request.

In this article we’ll cover why Orchestra is a great choice for both early-stage data teams and more mature ones.

At first sight, you might think it’s counter-intuitive for a SaaS solution to appeal to two wildly different audiences. Why should a massive team of a hundred engineers need the same thing as a single-person start-up?

The answer comes down to flexibility and scalability. Orchestra is pioneering a modular architecture, which means it is the only orchestration tool that scales as organisational patterns become more complex. You shouldn’t have to change tools as you grow, which is why we’ve designed the platform to fit the needs of every data team.

Why Orchestra is a great fit for early-stage Data Teams

Our good friends at LEIT Data estimate that organisations typically spend about 2 years and take roughly 3–4 attempts to get the data platform right. There is no reason it should take this long, and Orchestra can greatly ease this journey in the following ways.

No Infrastructure to Manage

Orchestra is a managed service, which means all you need to do is sign up and you can start building right away.

Alternative: hire a platform engineer who can provision CI/CD pipelines and cloud infrastructure (e.g. Kubernetes) and who understands complex orchestration frameworks (e.g. Airflow).

Another alternative: use a managed version of a complex orchestration framework, which puts you on a par with Orchestra, but of course you need to pay for that (more below).

Build Pipelines Rapidly and Declaratively

Orchestra has a world-class UI that lets you build pipelines declaratively and extremely quickly: up to 80% faster than writing Airflow code (or similar Python-based code).

The UI generates .yml code, which enables a powerful, partially asset-based orchestration framework that is code-first and integrates with your Git provider.

This means you get the benefits of code with the speed of a UI, as well as a tool that appeals to both technical and less technical audiences. This in turn reduces future risk, whichever organisational pattern you end up pursuing.

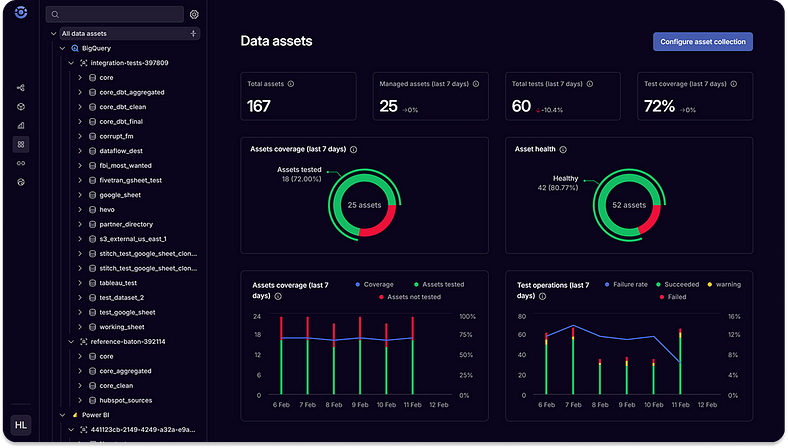

Automatic Cataloging, Lineage, Data Quality Monitoring, Metadata and Alerting

Data Teams typically want to do the following things to make maintaining pipelines easier, but don’t have the time early on:

Lineage: getting end-to-end lineage of processes to debug failures easily, identify affected data assets such as dashboards, and automatically rerun pipelines from the point of failure

Cataloging: aggregating metadata at a table level to aid discovery and answer the “When was this data last refreshed? Is it up-to-date?” question

Data Quality Monitoring: ensuring pipelines are healthy is imperative. But as Data Engineers, our goal is also to ensure that datasets, and the quality of data at rest, are up to standard. This typically requires yet more code or another tool

Metadata aggregation: you are running a dbt™️ pipeline, but then you realise that the simple message “dbt command failed” is unhelpful, so you write a pipeline to fetch artifacts, parse them, store them and aggregate them. You realise you need to do this for every other system too, from AWS Lambda to Azure Data Factory to Matillion to Coalesce. That is a lot of code to write yourself. Orchestra does all of this automatically, and this metadata is imperative for swift debugging.

Alerting: basic alerting in individual tools is fine, but wouldn’t it be nice to centralise all your alerts? After all, being able to democratise pipelines and bring in the owners of data (like sales ops heads or finance folks) is imperative in the early days. Instead of building this yourself and directing users to a highly technical, data-engineering-specific overview, you can point them to the Orchestra UI, which is designed to be a simple place anyone can use to understand data quality and debugging steps.
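To illustrate the kind of metadata aggregation described above, here is a minimal Python sketch that turns dbt’s `run_results.json` artifact (written to dbt’s `target/` directory after an invocation) into one readable line per failed node, instead of a bare “dbt command failed”. It relies only on the `results`, `unique_id`, `status` and `message` fields of that artifact; the function names and the sample payload are made up for illustration, and doing the same for other tools would need one parser per tool.

```python
import json
from pathlib import Path

# dbt reports "error" for failed models and "fail" for failed tests
FAILED_STATUSES = {"error", "fail"}


def summarise_failures(run_results: dict) -> list[str]:
    """Return one human-readable line per failed dbt node."""
    lines = []
    for result in run_results.get("results", []):
        if result.get("status") in FAILED_STATUSES:
            message = result.get("message") or "no message"
            lines.append(f"{result['unique_id']}: {result['status']} - {message}")
    return lines


def summarise_file(path: Path) -> list[str]:
    # Read a run_results.json file from disk and summarise it
    return summarise_failures(json.loads(path.read_text()))


if __name__ == "__main__":
    sample = {  # made-up payload following the shape of run_results.json
        "results": [
            {"unique_id": "model.shop.stg_orders", "status": "success", "message": "SUCCESS 1"},
            {"unique_id": "model.shop.fct_revenue", "status": "error",
             "message": 'Database Error: column "amount" does not exist'},
            {"unique_id": "test.shop.not_null_fct_revenue_id", "status": "skipped", "message": None},
        ]
    }
    for line in summarise_failures(sample):
        print(line)
```

Storing these summaries per run, alongside the equivalent output for every other tool, is what turns scattered logs into a single place to start debugging.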

Scalable compute infrastructure and simplified architecture

As with Python-based orchestrators, you can run Python scripts and dbt-core™️ in Orchestra.

This means you don’t need to worry about provisioning resources in your cloud provider to run Python scripts, or about adding another tool just to run dbt-core or your transformation framework.

Orchestra integrates with other sources of compute too, so over time, when it makes sense to use different compute sources for efficiency gains, Orchestra supports and actively encourages this.

No Lock-in

It might seem counter-intuitive that using Orchestra eliminates lock-in.

A Modular Data Architecture means Orchestration, Observability and Monitoring are separate from data processing. This naturally makes switching the orchestration engine easier.

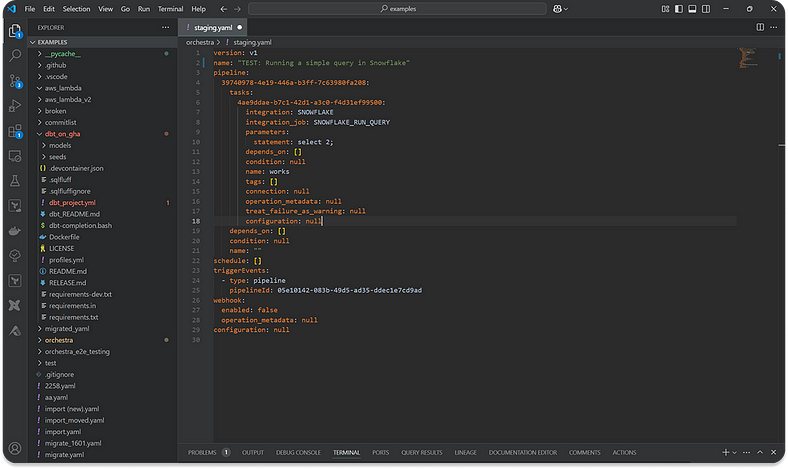
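One rough way to picture this separation: in the Python sketch below, the orchestration layer only knows how to trigger an external job and poll its status, while the actual processing happens in the external system. `ExternalJob`, `FakeSyncJob` and `orchestrate` are hypothetical names for illustration, not part of Orchestra or any vendor SDK.

```python
import time
from typing import Protocol


class ExternalJob(Protocol):
    """What the orchestration layer needs from any processing system."""

    def trigger(self) -> str: ...  # start a run and return its run id
    def status(self, run_id: str) -> str: ...  # "running", "succeeded" or "failed"


class FakeSyncJob:
    """Stand-in for e.g. an ingestion sync; reports success after a few polls."""

    def __init__(self, polls_until_done: int = 2) -> None:
        self._remaining = polls_until_done

    def trigger(self) -> str:
        return "run-1"

    def status(self, run_id: str) -> str:
        self._remaining -= 1
        return "running" if self._remaining > 0 else "succeeded"


def orchestrate(job: ExternalJob, poll_seconds: float = 0.0, max_polls: int = 10) -> str:
    """Trigger a job, then poll until it reaches a terminal state or we give up."""
    run_id = job.trigger()
    for _ in range(max_polls):
        state = job.status(run_id)
        if state in ("succeeded", "failed"):
            return state
        time.sleep(poll_seconds)
    return "timed_out"


if __name__ == "__main__":
    print(orchestrate(FakeSyncJob(polls_until_done=2)))
```

Because the orchestrator holds no processing logic, replacing it means re-implementing these thin trigger-and-poll calls, not rewriting the jobs themselves.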

Orchestra is super lightweight — the only code that gets written is a .yml file:

```yaml
version: v1
name: "TEST: Running a simple query in Snowflake"
pipeline:
  39740978-4e19-446a-b3ff-7c63980fa208:
    tasks:
      4ae9ddae-b7c1-42d1-a3c0-f4d31ef99500:
        integration: SNOWFLAKE
        integration_job: SNOWFLAKE_RUN_QUERY
        parameters:
          statement: select 2;
        depends_on: []
        condition: null
        name: works
        tags: []
        connection: null
        operation_metadata: null
        treat_failure_as_warning: null
        configuration: null
    depends_on: []
    condition: null
    name: ""
schedule: []
triggerEvents:
  - type: pipeline
    pipelineId: 05e10142-083b-49d5-ad35-ddec1e7cd9ad
webhook:
  enabled: false
operation_metadata: null
configuration: null
```

So let’s take an example. Say you are moving data into Snowflake using 10 Fivetran jobs and 5 Python scripts. You’re then running a Snowflake Task to load data from S3 into Snowflake, followed by a dbt-core™️ project to transform the data.

Orchestra is just stitching these jobs together. If you wanted to move to Airflow for whatever reason, it would be super easy:

Fivetran jobs require no changes; just add them to a Task Group in Airflow

The 5 Python scripts just get copied across

The Snowflake Task gets copied across using the SnowflakeOperator

The dbt-core™️ project gets copied into the Airflow project directory and executed with the BashOperator

This is exactly what you’d do if you were starting from scratch; in other words, there is no lock-in. You can see immediately how easy it is to port away if Orchestra isn’t the right fit.
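To make the “just stitching jobs together” point concrete, the Python sketch below models the example above as a plain dependency graph, computes a valid run order with the standard library’s `graphlib.TopologicalSorter`, and works out which tasks to rerun after a failure: the failed task plus everything downstream of it. The task names are hypothetical, the 10 Fivetran jobs and 5 scripts are collapsed to a few tasks for brevity, and this is a generic illustration of executing `depends_on`-style declarations, not a description of Orchestra’s scheduler.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks it depends on (hypothetical names).
PIPELINE = {
    "fivetran_crm": set(),
    "fivetran_billing": set(),
    "python_enrich": {"fivetran_crm"},
    "snowflake_task_load_s3": set(),
    "dbt_build": {"fivetran_crm", "fivetran_billing", "python_enrich", "snowflake_task_load_s3"},
}


def run_order(graph: dict[str, set[str]]) -> list[str]:
    """Return an order in which every task runs after all of its dependencies."""
    return list(TopologicalSorter(graph).static_order())


def rerun_from(graph: dict[str, set[str]], failed: str) -> set[str]:
    """Return the failed task plus every task downstream of it."""
    to_rerun = {failed}
    changed = True
    while changed:  # keep adding tasks that depend on anything already selected
        changed = False
        for task, deps in graph.items():
            if task not in to_rerun and deps & to_rerun:
                to_rerun.add(task)
                changed = True
    return to_rerun


if __name__ == "__main__":
    print(run_order(PIPELINE))
    print(sorted(rerun_from(PIPELINE, "fivetran_crm")))
```

Nothing in this graph is specific to one orchestrator: the same dependencies could be re-expressed as Airflow task dependencies, which is why moving away is mostly a copy-and-rewire exercise.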

No web to untangle: moving away from Orchestra is just a question of shifting Python files, a dbt-core™️ project, and references to components of the stack that already exist

No Sunk Cost fallacy: because developing in Orchestra is so quick, you won’t feel like it’s a huge burden to move away

Move to any architecture:

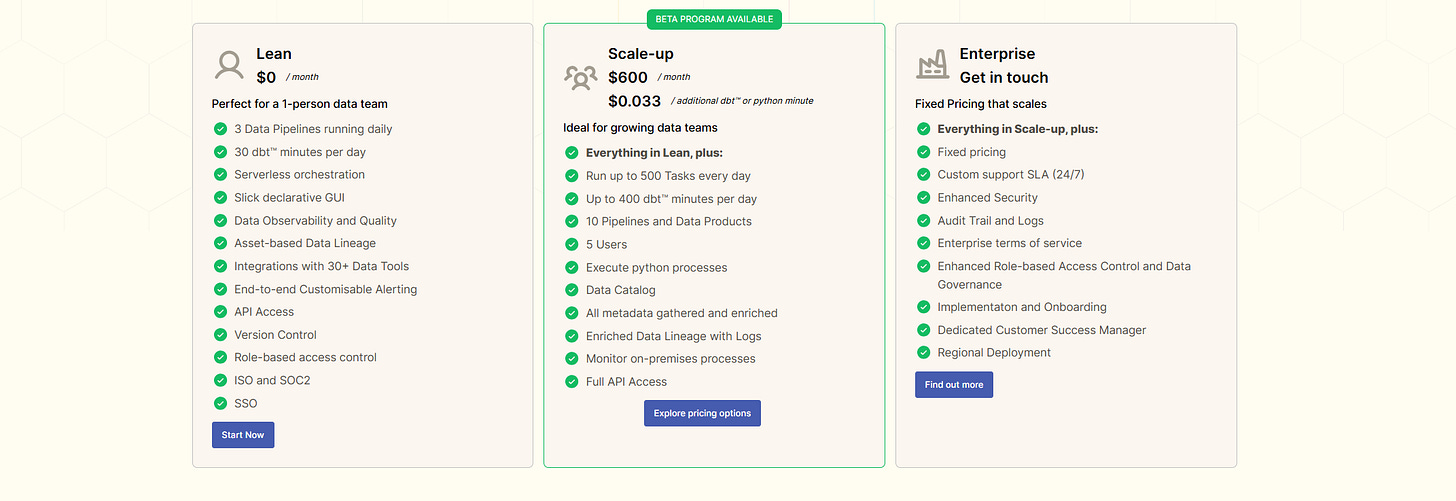

Scalable Pricing

Orchestra has a Free Tier, which lets you run daily data pipelines with multiple tasks and includes dbt-core™️ and Python too.

This is a great option for anyone starting out. At greater scale, the Scale-up Plan lets you run more pipelines, more tasks, and longer-running Python/dbt-core™️ workflows.

As you grow, Orchestra offers fixed pricing: we work with Data Engineers to understand their needs over the course of a year or longer, and provide a fixed price for that term.

This ensures:

Transparency: you are not wondering why you’ve been charged for 1,000 data assets and a usage-based consumption bill that is off-the-charts

Predictability: you know how much you will be charged

Scalability: the ability to move prices and limits up and down to fit your needs, ensuring you aren’t overpaying

Cost/TCO savings: it should go without saying, but for start-ups and small data teams, Orchestra dramatically reduces costs and Total Cost of Ownership compared to other Orchestration Tools

Enterprise features and security

We do not gate features such as environments or SSO, because we believe all data teams should be able to perform at the highest level without having to commit to enterprise-sized contracts.

As such, all accounts can use role-based access control, Single Sign-On and Environments to ensure robust practices from day 1 and to avoid security becoming an afterthought (accompanied by an angry central DevOps or IT team).

Conclusion

Orchestra is a unified control plane for Data and AI Workflows that is ready to go from day 1. We have seen 7 key reasons why it is a great fit for lean or early-stage teams:

Managed Infrastructure

Rapid declarative pipeline building

Automated metadata aggregation

No Lock-in

Scalable compute architecture

Scalable Pricing

Security

By providing a declarative orchestration framework together with an execution environment for Python and dbt-core™️, Orchestra gives Data Teams everything they need to get started from day 1.

One of Orchestra’s greatest benefits over other orchestrators is flexibility. You can use Orchestra to do everything, but you don’t have to: it has first-class support for over 100 integrations in the data space.

Furthermore, Orchestra has granular role-based access control and support for different environments, so when your organisation scales to many teams, you can still use Orchestra to stitch together different parts of the stack.

To find out more, why not give the platform a spin?