The Data Hierarchy of Needs

How Data Engineers can help prioritise different parts of engineering pipelines

About Me

Hello 👋 I’m Hugo, CEO and Founder of Orchestra, a platform for data orchestration, observability and operations ⭐️

Introduction

At university I was obsessed with Maslow’s hierarchy of needs.

The psychologist Abraham Maslow proposed a pyramid-shaped model called the hierarchy of needs (see the image below). You’ve probably heard of it.

A hierarchy of needs runs contrary to the standard economic view, which suggests that individuals maximise their satisfaction from a bundle of goods by trading them off against each other. The pyramid says that each lower layer is necessary for enjoyment of the layers above it. Or at least, every lower layer affects the enjoyment of every layer above it (you’re less likely to care about what your colleagues think of you if you’re hungry every day, for example). This means you can’t simply trade off thing A for thing B, because giving up a bit of A changes how much you enjoy B, over and above the extra B you receive in exchange.

Aside from making us all feel incredibly lucky, this also made me realise data can be structured in the same way.

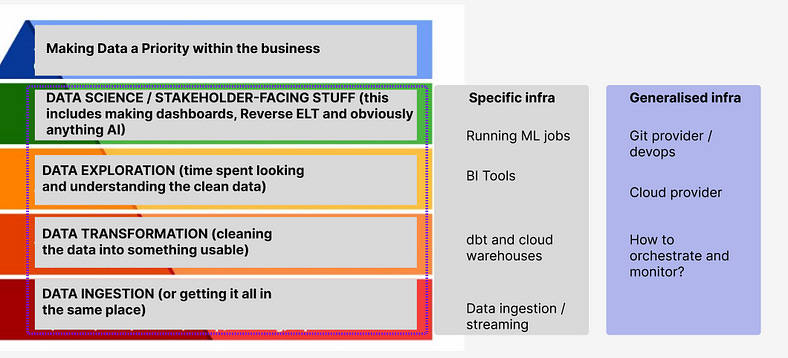

To explain this a bit more:

Top level: you can never convince your CEO to invest more in a data function if they aren’t seeing results (tangible, measurable improvements in metrics people care about) across different people in different orgs.

Data Science: high-value use cases are incredibly difficult to get value from if the data pipelines are flaky and the data quality is poor. People simply lose trust, or models make bad predictions, and it’s very difficult to move forward.

Data Exploration: you can’t do good exploration without nice, reliable, clean data. You can try to explore bad data, but inevitably you won’t fully understand what is going on.

Data Transformation: you can’t do good transformations if the data isn’t in the same place. Literally impossible.

Data Ingestion: isn’t this what people did 20 years ago?

What’s interesting is that the layers are necessary to different degrees. Data ingestion is fundamentally necessary for transformation, but not for exploration (you could, for example, download some CSVs and explore them in an Excel file).

Data science and high-value use cases also depend on the layers below them, but not completely. For example, you can get away with not doing data exploration and just rolling out predictive models, but you’ll probably struggle to explain why you’re doing what you’re doing, or why it does or doesn’t work.
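To make the "necessary to different degrees" idea concrete, here is a minimal sketch that models each layer with "hard" prerequisites (impossible without them) and "soft" ones (possible, but worse without them). The layer names, the `LAYERS` mapping and the `assess` function are all hypothetical, and which dependency counts as hard or soft is my own reading of the pyramid rather than anything definitive.

```python
# Hypothetical model of the hierarchy. "hard" = the layer cannot work without it,
# "soft" = the layer works without it, but with worse results.
# The hard/soft assignments are an assumed reading of the pyramid.
LAYERS = {
    "ingestion": {"hard": [], "soft": []},
    "transformation": {"hard": ["ingestion"], "soft": []},
    "exploration": {"hard": [], "soft": ["ingestion", "transformation"]},
    "data_science": {"hard": ["transformation"], "soft": ["exploration"]},
}


def assess(layer, in_place):
    """Classify a layer as 'blocked', 'degraded' or 'ready'.

    Only the layer's direct prerequisites are checked against `in_place`,
    the set of layers that already work.
    """
    deps = LAYERS[layer]
    if any(dep not in in_place for dep in deps["hard"]):
        return "blocked"
    if any(dep not in in_place for dep in deps["soft"]):
        return "degraded"
    return "ready"


# Exploration on downloaded CSVs: possible, but not great.
print(assess("exploration", set()))
# Transformation with nothing ingested: not possible.
print(assess("transformation", set()))
```

In this sketch, skipping exploration leaves data science "degraded" rather than "blocked", which matches the idea that you can roll out models without it but will struggle to explain them.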

The biggest similarity I see, though, is in the top level: convincing people data is a good idea. In Maslow’s original hierarchy, the top level is “self-actualisation”: being the best you can be. This is something many people strive for, even people who don’t necessarily have all the other “steps” in the hierarchy 100% locked down (I don’t either, and I think that’s completely fair).

Within data, it’s vital to get stakeholders to buy in to the idea that data is a good idea. Sure, it’s hard to do that without evidence, but a few well-functioning dashboards or exploratory data insights can be enough.

There’s a possible exception: for certain use cases, you may not even need to follow the hierarchy (think of “0 ELT” tools that just “get the job done”). This is a bit of a moot point though, because those tools are themselves following the hierarchy.

Do you buy infrastructure in the right order?

What follows from this is that you should first buy an ingestion tool, then a cloud warehouse, then a BI tool and so on.
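Taken literally, that buying order is just a topological sort of the hierarchy. As a rough sketch, assuming three hypothetical tool categories and a `builds_on` mapping I made up for illustration, Python’s standard-library `graphlib` can derive the order:

```python
from graphlib import TopologicalSorter

# Hypothetical tool categories; each one maps to the categories it builds on.
# This encodes the literal reading of the hierarchy, nothing more.
builds_on = {
    "ingestion_tool": set(),
    "cloud_warehouse": {"ingestion_tool"},
    "bi_tool": {"cloud_warehouse"},
}

# static_order() yields every category after the ones it builds on.
purchase_order = list(TopologicalSorter(builds_on).static_order())
print(purchase_order)
```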

This is where the analogy breaks down a bit: there’s no reason you can’t do some good planning for the entire stack upfront. Something I often see is data engineers jumping very quickly to the specific infrastructure, the infrastructure that operates at the data layer. They don’t think about the general infrastructure they need to implement the whole thing.

How will teams version control their work? Does the Git provider integrate with your DevOps provider? What cloud provider will you use for ad-hoc tasks or custom infrastructure? How will you trigger, orchestrate and monitor all of these systems?

And even more pressing: what about security? Can this all be hosted in the relevant subnet to ensure compliance with your software team’s requirements?

What’s the point?

The point I want to make here is two-fold:

Data pipelines are really important for deriving business value. They have no inherent value, no one sees them and they are incredibly complex, but they are fundamentally necessary, and possibly sufficient, for achieving the goal of making data a priority in a business.

Blindly following the hierarchy of needs on the grounds that it is a perfect analogy would be a mistake. There are some important architectural considerations to make when choosing infrastructure, particularly how the data stack fits with the software stack and how orchestration and monitoring are done. Picking these winners upfront will help you ascend the hierarchy faster.