Why Private Equity Might Be the Ultimate Setting for Data Platforms

How funds can generate better returns on investment by rolling out scalable data platforms across portfolio companies

PE firms are in an interesting position. They can use data to gain an edge over other PE firms. As out-and-out operators who typically take sizeable stakes in companies, they also often exert a lot of control over how those businesses are run.

One area that has not yet been tapped at scale is the implementation of a data platform within portfolio companies. Much of the low-hanging fruit, like operational analytics and sales funnel tracking, is uniform across companies. There should therefore be clear paths to implementing a powerful and genuinely value-accretive platform.

Today, this is a highly technical exercise that requires hiring many skilled data engineers, and this type of hire is difficult to attract in a PE setting. It need not be, however: the last technical hurdle, after all, is orchestration.

So, throwing many highly skilled platform engineers at every portfolio company to copy and paste a readymade data platform written in Airflow (or similar) will work. There is, however, a better and cheaper way: use a managed orchestration platform.

The return on investment would be immense. The data platform leads at a PE firm can design the platform and specify the use-cases, the tooling and so on. They may even be able to negotiate discounts with a specific vendor.

When an acquisition occurs, the company’s data function pivots to the readymade, easy-to-use data platform, which is optimised to serve specific use-cases that we know drive business value. The Data Team is no longer an isolated group of silent engineers building infrastructure nobody knows about. Instead, they receive an almost god-given mandate to execute on key business priorities that have been shown time and time again to align with the goals of the business. They become vocal, key enablers driving positive change across the organisation.

I think this is really cool, and it has a lot of transferable lessons for those of us working in a “Central Data Platform” team model, where engineers are the stewards of a platform that others can use.

Article below.

Introduction

AI is but the tip of the iceberg in a long and ongoing story of inevitable digital transformation, ushered in by wave after wave of improving technology.

At the heart of everything is efficiency and automation. How can we automate arduous manual tasks? How can we improve the process of carrying out these manual tasks?

Everybody falls into data one way or another. An extremely common path is for someone in an operational division of a company, like finance, marketing or sales ops, to become the “resident data person”. Their role is born out of a need to automate repetitive processes, and things swiftly get out of control.

I have spoken to countless Data Leads who relay something along the lines of:

The company has raised an additional round of funding or has been acquired, and is now poised for future growth. To facilitate basic analytics and answer basic questions like “How is the Sales Funnel doing?” or “Who is using my Product and how much?”, the company hired a data analyst and a data scientist. The infrastructure they have built is now not scalable.

- Data Lead, every Growth-stage company

Private Equity firms have long known the importance of improving processes. Historically, management consultants like Bain, BCG and McKinsey would be brought in to conduct a “Project”: essentially a review of existing business processes and how they can be improved. The resulting recommendations were typically basic. Examples include getting a CRM, tracking fundamental KPIs in Excel, or building simple workflows like reaching out to leads who show interest in products through a sign-up form.

Build, Implement, Rinse, Repeat. This is the Consultancy Way.

Mid-Market Private Equity funds like Livingbridge, Bridgepoint and others are now seeing there is a better way. The rise of the Modern Data Stack means there is now a rinse_repeat_v2 available to Private Equity Operators.

This approach suffers from one key issue that currently costs companies across all verticals, from insurance to eCommerce to manufacturing, hundreds of thousands of dollars in wasted investment. It also introduces undue risk and wasted time, leading to a fundamentally inefficient use of capital.

The Current V2 — the Data Platform for a Modern Private Equity-backed company

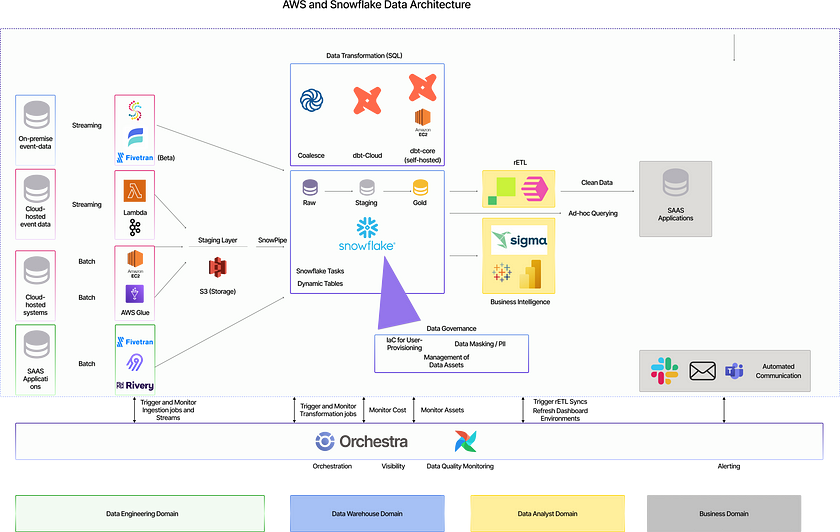

The diagram above shows what the data stack for a private-equity-backed company on AWS might look like at its most complicated.

There are a few common pitfalls with implementing something like this.

A lack of prioritisation

Self-Service BI is the idea that you can have a data platform team make data available to the business, and the business will know what to do with that data.

What many Private Equity Operators do not realise is that this idea is a theoretical one, and in practice has an incredibly low success rate.

The idea that business users are able to accurately decide what to do with the data to improve their own processes is, for the most part, just an idea and not a reality. I have worked in organisations large and small where even a little bit of direction would have gone a long way.

Furthermore, the ROI of data initiatives is not uniformly distributed: some are vastly more beneficial than others.

This is why prioritisation is key. Do not implement a Data Platform for the sake of it. Implement it to:

Automating Lead Tracking for Businesses with Sales Reps: Automating marketing and sales tracking can vastly improve the efficiency of sales reps. If you take a sales rep from 10 opportunities a month to 15, you are directly impacting the top line in an extremely meaningful way.

Automating Marketing Attribution: Again, a marketing budget could run to millions of dollars. If you can optimise it, even a 20% improvement in its efficacy will translate directly to the top line, provided you have a lean lead → opportunity → revenue process.

Automating Finance Tasks: Mistakes in Finance can be costly, and Finance is an incredibly underrated part of the B2B customer experience. Reducing churn by automating finance processes can be a huge boon to existing revenue.

Product Usage: Understanding who is doing what in the product or service you provide is probably the most fundamental thing to get right. With up-to-date, accurate information on how customers use your product or service, you can assure prospective investors that your metrics are reliable. That assurance can quite literally save the company.
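The sketch below makes the attribution use-case more concrete by comparing two simple rule-based attribution models in Python. Last-touch gives all the credit for a conversion to the final channel before it. Linear splits that credit evenly across every channel touched. The journeys, channel names and revenue figures are made up for illustration; real attribution work would typically start from event data in the warehouse.

```python
from collections import defaultdict

# Hypothetical example data: each converting journey is the ordered list of
# marketing channels a customer touched, plus the revenue it produced.
journeys = [
    (["paid_search", "webinar", "email"], 1200.0),
    (["linkedin", "email"], 800.0),
    (["paid_search"], 500.0),
]


def last_touch(journeys):
    """Give 100% of each conversion's revenue to the final touchpoint."""
    credit = defaultdict(float)
    for touches, revenue in journeys:
        if touches:  # skip journeys with no recorded touchpoints
            credit[touches[-1]] += revenue
    return dict(credit)


def linear(journeys):
    """Split each conversion's revenue equally across all of its touchpoints."""
    credit = defaultdict(float)
    for touches, revenue in journeys:
        if not touches:
            continue
        share = revenue / len(touches)
        for channel in touches:
            credit[channel] += share
    return dict(credit)


print(last_touch(journeys))  # {'email': 2000.0, 'paid_search': 500.0}
print(linear(journeys))      # {'paid_search': 900.0, 'webinar': 400.0, 'email': 800.0, 'linkedin': 400.0}
```

Even on three journeys, the two models disagree sharply. Paid search gets 500 vs. 900, and email gets 2,000 vs. 800. This is why it pays to settle on an attribution approach before reallocating a budget.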

There are, of course, a host of other use-cases.

For example, any company providing credit, such as a scale-up leasing devices or an insurance company, needs data. A more accurate, real-time picture of an individual’s credit risk enables better credit decisions. That improves the firm’s P&L, reduces default rates and ensures more customers get access to the service. This is so fundamental to these types of businesses that failing to invest in a data platform would be a fatal mistake.

Another area is manufacturing, energy and power. We (Orchestra) work with an energy and windfarm provider. If temperatures drop below a certain point, the windfarms cannot operate. The cost of failing to identify this is enormous. Forcing the windfarms to run under these conditions can incur huge costs and, in the worst-case scenario, the turbines will break.

This is a clear example of a very simple action that can be facilitated by a Data Platform that has the potential to save a manufacturing company literally millions of dollars every year.
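The windfarm case boils down to a threshold check wired to an alert. The following is a minimal Python sketch, assuming sensor readings already land in the data platform. The cut-off temperature, turbine IDs and alert wording are hypothetical; the real operating limit would come from the turbine manufacturer.

```python
from dataclasses import dataclass

# Hypothetical cut-off: the real operating limit depends on the turbine model.
MIN_OPERATING_TEMP_C = -10.0


@dataclass
class Reading:
    turbine_id: str
    temperature_c: float


def turbines_to_stop(readings, min_temp_c=MIN_OPERATING_TEMP_C):
    """Return sorted IDs of turbines with any reading below the operating limit."""
    return sorted({r.turbine_id for r in readings if r.temperature_c < min_temp_c})


def build_alert(turbine_ids):
    """Format a plain-text alert; in practice this would go to Slack, email or a pager."""
    if not turbine_ids:
        return None
    return f"Temperature below operating limit for: {', '.join(turbine_ids)}"


readings = [
    Reading("T1", -4.5),
    Reading("T2", -12.0),
    Reading("T3", -15.3),
]
print(build_alert(turbines_to_stop(readings)))
# Temperature below operating limit for: T2, T3
```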

Failure to recognise the correct organisational pattern

Not all companies are created equal. I have never worked in an organisation where every employee understands the importance of data and knows how to code, but such organisations do exist. Stripe and Facebook are examples.

For the most part, companies backed by Private Equity are not Stripe or Facebook. Different companies require different Data Team structures:

Central team: a central data team maintains all infrastructure and fields requests for business users

Central team + BI: a central data team maintains all infrastructure and fields requests for business users. Business users are comfortable maintaining dashboards

Central Platform team: a central data team maintains all infrastructure and enables analysts and engineers embedded in other teams to use the Data Platform in a governed way. Those embedded analysts and engineers field requests for their own team. The Central Team may do some data modelling

Data Mesh: many teams require access to data pipelining capabilities. The Central Team controls the list of tools, but there are more tools, and data sharing between teams is essential

You will notice that these patterns correlate quite strongly with an organisation’s maturity. PE-backed companies will typically need to be in Camp 1 or 2 (the first two patterns above), sometimes Camp 3, and rarely Camp 4 (unless you are talking about large-cap Pharma acquisitions, for example).

Often, Private Equity Operators rush to hire central data platform teams without realising what is actually required. For Camp 1, some basic SaaS tooling and hand-holding, perhaps with daily reports based on easy-to-model data, will suffice.

At the other end, especially in larger organisations, Operators make the mistake of thinking they can simply hire many data engineers. They do not realise that a Data Architect or Data Leader who can build out a data strategy is just as important.

Either way, deciding on the pattern is incredibly important.

A lack of understanding around tools

This is perhaps the easiest mistake to forgive, given the plethora of tools out there. Common patterns for making things work do, however, exist.

Something we often see is that platforms rely heavily on the “one job one tool” principle. For things like data warehousing and data modelling, this uniformity is hugely advantageous as it provides guardrails and frameworks that make work more repeatable.

For Data Ingestion, the opposite is true: relying solely on a single vendor to move data to storage is normally a disaster. Prices increase, and teams need new features or more speed. Often a single vendor is simply not the best fit for every source. For these reasons, the best approach is often a combination of managed SaaS (e.g. Fivetran, Airbyte), streaming (e.g. pub/sub, event bus, SQS) and self-written code (Python).

This naturally presents an integration cost, but one that is almost always worth bearing.
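A common pattern for the self-written part of an ingestion mix is the high-water-mark incremental load. You remember the latest `updated_at` value seen, and on the next run you fetch only rows changed after it. Here is a minimal Python sketch, with an in-memory list standing in for a hypothetical source API or database:

```python
from datetime import datetime, timezone

# Hypothetical source records, standing in for rows returned by an API or a
# database query. Each record carries an `updated_at` timestamp.
SOURCE = [
    {"id": 1, "updated_at": datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)},
    {"id": 2, "updated_at": datetime(2024, 1, 1, 10, 0, tzinfo=timezone.utc)},
    {"id": 3, "updated_at": datetime(2024, 1, 2, 8, 30, tzinfo=timezone.utc)},
]


def extract_incremental(records, watermark):
    """Return rows changed since `watermark`, plus the new watermark.

    Only rows with updated_at strictly greater than the previous watermark
    are returned, so each run moves new or changed data only.
    """
    new_rows = [r for r in records if r["updated_at"] > watermark]
    new_watermark = max((r["updated_at"] for r in new_rows), default=watermark)
    return new_rows, new_watermark


# First run: start from a watermark far in the past to load everything.
rows, wm = extract_incremental(SOURCE, datetime(1970, 1, 1, tzinfo=timezone.utc))
print(len(rows), wm.isoformat())  # 3 2024-01-02T08:30:00+00:00

# Second run with no new data: nothing is loaded and the watermark is unchanged.
rows, wm = extract_incremental(SOURCE, wm)
print(len(rows), wm.isoformat())  # 0 2024-01-02T08:30:00+00:00
```

In practice, the watermark would be persisted between runs, for example in a state table. A strict `>` comparison can also miss late-arriving rows that share the watermark's timestamp. For that reason, many teams re-read a small overlap window and deduplicate on the primary key.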

Activation is another area people do not really understand. A “BI Tool” is usually treated as the main way to surface insights, but there are other, cheaper options.

For example, upskilling a power user to query the data warehouse directly is, when done properly, much more powerful than simply giving someone a dashboard to look at. Pushing data back into tools people are already comfortable with, like ERPs and CRMs, means Sales and Finance folks don’t need any upskilling at all to get the insights they need, for example:

CRM: for a given customer, when was the last time they visited the website, what have they downloaded, what is their lead score, have we spoken to them before, have they used the platform, etc.

ERP: when is this PO due, is it paid, what are the terms, what is the usage, and so on.
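A few lines of Python can sketch both ideas above: a power user querying the warehouse directly, and the results being pushed back into a CRM. Here an in-memory SQLite database stands in for the warehouse. The table, columns and payload keys are hypothetical. A real reverse-ETL step would map these fields onto the target CRM's own fields and API, which this sketch does not call.

```python
import sqlite3

# An in-memory SQLite database stands in for the data warehouse; the table
# and column names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE web_events (customer_id TEXT, event_type TEXT, event_date TEXT);
    INSERT INTO web_events VALUES
        ('acme', 'visit', '2024-03-01'),
        ('acme', 'download', '2024-03-04'),
        ('globex', 'visit', '2024-02-20');
""")

# The kind of query a power user might run directly against the warehouse.
QUERY = """
    SELECT customer_id,
           MAX(CASE WHEN event_type = 'visit' THEN event_date END) AS last_visit,
           SUM(CASE WHEN event_type = 'download' THEN 1 ELSE 0 END) AS downloads
    FROM web_events
    GROUP BY customer_id
    ORDER BY customer_id
"""


def build_crm_updates(connection):
    """Turn warehouse rows into per-customer field updates for a CRM.

    The payload shape is illustrative only.
    """
    return [
        {"customer_id": cid, "last_website_visit": last_visit, "downloads": downloads}
        for cid, last_visit, downloads in connection.execute(QUERY)
    ]


for update in build_crm_updates(conn):
    print(update)
```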

Alerting, and building processes around that, can also be incredibly powerful, and doesn’t require a dashboard.

The error that costs firms millions annually

Data Architecture these days is actually pretty simple. Load data from source to target, transform data, and activate it:

Every logo in that diagram is a managed service that any data engineer will be familiar with, bar Orchestra. So why is Orchestra there at all?

The final hurdle is stitching everything together. If there is an error in the data, the last thing you want is to send the CEO of your newly acquired portfolio company a report that tells them to do the wrong thing.

It is very amateur to schedule the first jobs at 1am, the next at 3am, the final ones at 5am (just to be safe), and so on. If the 1am job runs late or fails, the 3am job runs anyway on stale or incomplete data, and often nobody is told. Indeed, this flakiness, which so many data teams believe they can live with, is one of the reasons that the uptake of data-driven processes is so low in many organisations.
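The alternative to clock-based scheduling is to run each task only once everything it depends on has succeeded. Below is a minimal sketch using Python's standard-library `graphlib` (3.9+). The task names are hypothetical. The sketch illustrates the idea rather than how Orchestra, Airflow or any other orchestrator is implemented.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
# Every task must appear as a key, even if it has no dependencies.
PIPELINE = {
    "ingest_crm": set(),
    "ingest_billing": set(),
    "transform_revenue": {"ingest_crm", "ingest_billing"},
    "refresh_dashboard": {"transform_revenue"},
}


def run_pipeline(pipeline, run_task):
    """Run tasks in dependency order and skip everything downstream of a failure.

    `run_task(name)` should return True on success. A task runs only after all
    of its upstream tasks have run, never at a fixed clock time.
    """
    succeeded, failed, skipped = [], set(), set()
    for task in TopologicalSorter(pipeline).static_order():
        if pipeline[task] & (failed | skipped):
            skipped.add(task)  # an upstream task failed or was itself skipped
            continue
        if run_task(task):
            succeeded.append(task)
        else:
            failed.add(task)
    return succeeded, failed, skipped


# Simulate the billing load failing.
succeeded, failed, skipped = run_pipeline(PIPELINE, lambda t: t != "ingest_billing")
print(succeeded, failed, sorted(skipped))
# ['ingest_crm'] {'ingest_billing'} ['refresh_dashboard', 'transform_revenue']
```

Because `transform_revenue` never runs on half-loaded billing data, a failure surfaces as a skipped, visible task rather than a silently wrong report.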

It’s only a problem if someone notices? Why Data Teams need to recalibrate

You miss 100% of the shots you don’t take

Private Equity Operators need to recognise that to make a meaningful difference, data pipelines need to be extremely robust. Trust is famously a difficult thing to build, but an easy thing to lose — convince your new portfolio employees that you are going to make things better by not giving them bad data.

This leads us on to Orchestration Tooling.

The de facto standard orchestrator for data engineers has long been Apache Airflow. Apache Airflow is not something you buy: it is something you install on a cluster of machines and monitor like a hawk. All the while, you pay AWS or GCP a non-trivial amount to run those machines.

Airflow, like the open-source orchestrators that followed it, is a very complicated framework. The development and maintenance required to turn it into a usable, well-integrated solution for data pipelines is therefore immense.

It is something that eats away at the already limited hours the data team has in a day. I recently spoke to a friend at an insurance company who said “We cannot move away from [Insert OSS Orchestrator here] because the team have invested so much time into making it usable, it would kill them to remove it. The time cost is hundreds of thousands of pounds”

This was for a data team of 5.

The question here is not even about cost; it is about driving revenue. It is about using data to identify the opportunities, inherent to every organisation, that can meaningfully impact growth metrics. Data people are smart and love to solve complicated problems. Would you not rather they helped you drive the company forward than implement infrastructure for infrastructure’s sake?

To summarise:

Existing Orchestration frameworks are highly complex, and require specialist knowledge or lots of time investment to learn

They require a significant amount of boilerplate code to get started with basic connectivity between tools, alerting, and visibility

They are difficult to use and preclude the idea of a data mesh or self-service data platform that less technical users can leverage

Implementation is time-consuming, which results in massive opportunity cost, both for the organisation (what could we have done if we had known that) and for the data team (how could we have helped if we hadn’t spent all that time getting Airflow to work)

Implementation is risky: building a data platform on a tool like Airflow inevitably introduces some tech debt, which is of course the enemy of scalability. There is also hiring risk: what if you cannot find the right people to run the project? And finally, key-man risk: when something as complicated as Airflow is introduced, the smartest person in the team inevitably takes control. What happens when they leave for greener pastures?

Implementation does not include best-in-class features: there is a reason that there is a whole “new” category of extremely expensive data quality and “data observability” tools. Orchestration tools do not give you a bird’s-eye view of data pipelines and data quality at rest. This is more cost, more investment, more lost time.
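To illustrate the boilerplate point in the list above, here is the kind of retry-and-alert wrapper teams often end up writing and maintaining around every pipeline step when their tooling does not provide it. It is a minimal Python sketch. The function and parameter names are hypothetical. `on_failure` would normally post to Slack, email or a pager rather than print.

```python
import time


def run_with_retries(task, max_attempts=3, base_delay_s=1.0,
                     on_failure=print, sleep=time.sleep):
    """Call `task()` with exponential backoff; alert via `on_failure` if every attempt fails.

    `sleep` is injectable so the wrapper can be tested without actually waiting.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception as exc:  # deliberately broad: any failure triggers a retry
            if attempt == max_attempts:
                on_failure(f"Task failed after {max_attempts} attempts: {exc!r}")
                raise
            sleep(base_delay_s * 2 ** (attempt - 1))  # waits 1s, 2s, 4s, ...


# Usage: a hypothetical flaky extract that fails twice, then succeeds.
calls = {"n": 0}


def flaky_extract():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("source API timed out")
    return "loaded 1,000 rows"


delays = []
print(run_with_retries(flaky_extract, sleep=delays.append))  # loaded 1,000 rows
print(delays)  # [1.0, 2.0]
```

Multiply this by connectivity to every tool, plus logging and visibility, and the maintenance burden described above adds up quickly.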

The second-to-last point is very important.

📚 How to go from Analytics Engineer to Platform Engineer in 2 weeks

Hiring a talented platform engineer is not easy. The Data Engineering market is niche. There are many companies hiring and many candidates, yet matching the two is difficult because requirements vary so much from one organisation to the next.

If you can implement a scalable but simple architectural pattern like the one above, the type of candidate and skillset required suddenly becomes much easier to hire for, cheaper, and more ubiquitous — it really is a win-win.

How to avoid falling into the OSS Orchestration Trap

Implement a Modular Architecture

Needless to say, you should implement a Modular Architecture, where the components that move, transform, and activate data are separate.

📚 Microservices vs. Monolithic Approaches in Data

This is a tried-and-tested method and the modern manifestation of the ELT paradigm.
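One way to picture that separation in code is three small interfaces, one per stage, with the pipeline depending only on those interfaces. Here is a minimal Python sketch using `typing.Protocol` (Python 3.9+ for the `dict[...]` and `list[...]` annotations). The class names and toy implementations are hypothetical stand-ins for real ingestion, transformation and activation tools.

```python
from typing import Iterable, Protocol

# Hypothetical row type for the sketch: one record as a plain dict.
Row = dict[str, object]


class Extractor(Protocol):
    """Anything that moves data out of a source system."""
    def extract(self) -> Iterable[Row]: ...


class Transformer(Protocol):
    """Anything that turns raw rows into modelled rows."""
    def transform(self, rows: Iterable[Row]) -> list[Row]: ...


class Activator(Protocol):
    """Anything that pushes modelled rows to where people use them."""
    def activate(self, rows: list[Row]) -> int: ...


class ListExtractor:
    """Toy source: yields rows from an in-memory list."""
    def __init__(self, rows: list[Row]) -> None:
        self.rows = rows

    def extract(self) -> Iterable[Row]:
        return iter(self.rows)


class DropTestAccounts:
    """Toy transformation: removes internal test accounts."""
    def transform(self, rows: Iterable[Row]) -> list[Row]:
        return [r for r in rows if not str(r["email"]).endswith("@test.com")]


class PrintActivator:
    """Toy activation: prints rows instead of calling a CRM or ERP."""
    def activate(self, rows: list[Row]) -> int:
        for row in rows:
            print(row)
        return len(rows)


def run(extractor: Extractor, transformer: Transformer, activator: Activator) -> int:
    """Wire the three stages together; each can be replaced independently."""
    return activator.activate(transformer.transform(extractor.extract()))


rows = [{"email": "ana@acme.com"}, {"email": "qa@test.com"}]
print(run(ListExtractor(rows), DropTestAccounts(), PrintActivator()))
```

With this structure, swapping one ingestion vendor for another means writing a new `Extractor`, without touching the transformation or activation code.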

Option 1: High Skills with a ready-made platform

The first option is to package the platform yourself in a tool like Airflow. The advantage here is that you (the fund) can hire some very intelligent people to build, document, and prepackage the platform.

You can then hire folks to implement it themselves in different organisations. Because the work is done, there is less risk of building the wrong thing or under-scoping the work.

Another option is to ensure everyone in your portfolio companies can code. If everyone in the organisation is highly technical and “gets” data, then the fact that an OSS tool may not be usable by a non-technical user is largely irrelevant.

Option 2: Use a code-first, managed Orchestration and Visibility Platform

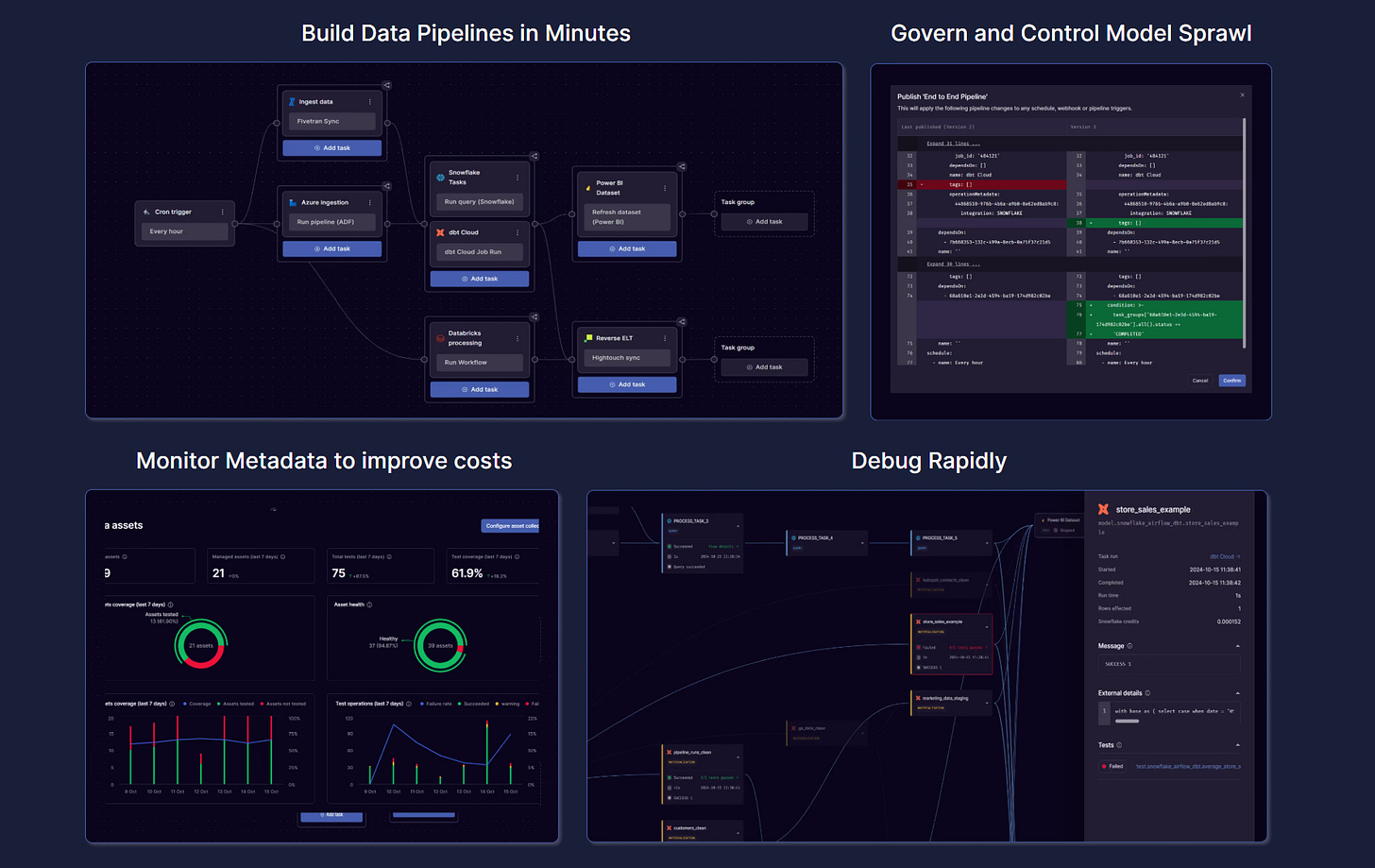

Orchestra is the first unified control plane for data. It is a managed service that includes a declarative orchestration tool wrapped with an industry-leading interface for data pipeline and quality monitoring, alerting, and governance.

Orchestra takes care of the hard work a data team would otherwise need to do before it can start delivering value.

Managed Service: Orchestra is a fully serverless managed service, which means data teams do not need to invest time learning Docker and Kubernetes or maintaining cloud infrastructure

Built for the Modern Data Tooling: Orchestra is already integrated with data tools, cloud tools, and on-premise infrastructure packages. This means data teams do not waste time writing boilerplate code just to stitch things together with proper error handling

📚 See how Orchestra integrates modern tooling

Automatic Metadata aggregation: Orchestra fetches metadata from all underlying processes and displays it in the UI. This saves the data team a job and makes debugging faster, because analysts can instantly get to the root of the problem

📚 See metadata in action

Best-in-class and code-first: Orchestra has industry-leading Role-based access control, is code-first and leverages a fully declarative framework. This enforces governance and stops projects sliding out of control, while adhering to software engineering best practices

Rapid debugging and minimal maintenance: Orchestra’s lineage view means anyone can easily debug pipelines. Maintenance can even be outsourced, as fixing pipelines no longer requires specialist knowledge

📚 Rapid debugging using Orchestra’s lineage view

Data Quality and Consolidated Architecture: you can consolidate multiple tools into Orchestra, as it can run Python code and dbt-core code. This means you do not need to spin up separate resources in, e.g., AWS to run arbitrary code, and you do not need to purchase a standalone tool just for running dbt.

📚 How Orchestra helps organisations adopt dbt

Data Quality Monitoring at rest and a data catalog are also included, and both require little to no maintenance. Data teams therefore do not build pipelines without thinking about data quality. They also avoid assessing additional third-party vendors and incurring additional cloud costs.

Fixed pricing: Orchestra has fixed pricing so data teams can scale without unknowingly incurring enormous cloud bills in multiple places

Results

Companies implementing Orchestra have decreased development cycle time by 60%, doubled maintenance efficiency, and even reduced their Snowflake costs.

They are leaner, move faster, and provide better quality data at tighter SLAs. This fundamentally means that the data team are many times over more productive and independent, and are swiftly becoming genuine assets to the companies they work for rather than cost centres.

Conclusion — a readymade Data Platform for Private Equity Operators

Despite the wide variety of data tools in the market and plethora of Data and AI use-cases, patterns are beginning to emerge that present a huge opportunity for Private Equity Operators.

One of these is the use-case for a Data Platform. The Data Team might historically have been a cost centre for Operators. Now the promise of out-of-the-box, flexible platforms like Orchestra means PE firms have more opportunity than ever to drive businesses with Data and AI.

We have seen in this exposition that with careful planning and the right tooling, the same type of data platform can be repeatably and reliably deployed across different growth-stage companies to meaningfully accelerate revenue and reduce operational costs.

A cornerstone of this approach is ensuring data teams avoid the need to spend time writing boilerplate code and maintaining unnecessary infrastructure. Orchestra solves many of these problems. Careful choice of ingestion tools, storage layer, and robust activation will also be key to enabling this strategy at scale.

PE-owned companies can also benefit here from a predefined data platform strategy that operators know works and can theoretically leverage any toolset on the market, provided it does not necessitate an overly high skills requirement.

This presents significant improvements in ROI, risk, and implementation time (of up to 3–5x) vs. implementing a complex legacy framework such as Airflow for orchestration. It also eliminates the need for a highly technical and expensive group of platform engineers who inevitably become a bottleneck for the organisation’s future growth.

If you’d like to learn more about Orchestra, please visit the Orchestra website.

If you’d like to discuss how we work with Private Equity, please reach out.

Happy building.